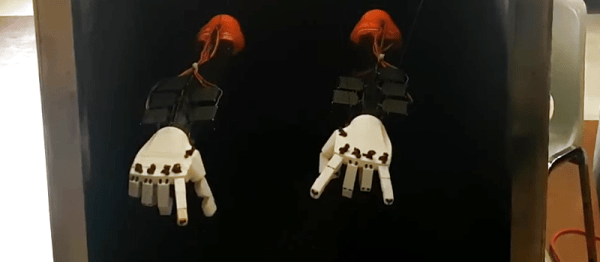

The Hands|On glove looks like a Power Glove replacement, but it’s a lot more than that, and a lot better. (Which is not to say that the Power Glove wasn’t cool. It was bad.) And it has to be: its job isn’t playing stripped-down video games but reading the user’s sign-language gestures aloud, so that people who don’t understand sign language can understand those who do.

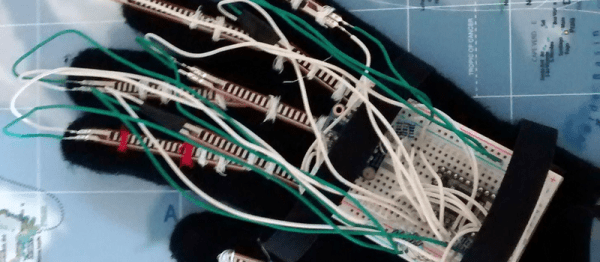

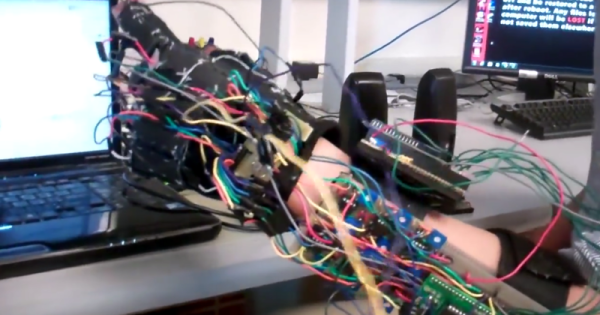

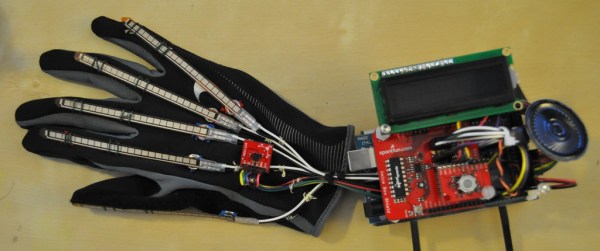

The glove needs a lot of sensor data to interpret the user’s gestures accurately, and the Hands|On doesn’t disappoint. Multiple flex sensors are attached to each finger, so the glove can tell which joints are bent. Some fingers have capacitive touch pads so the glove knows when two fingers are touching each other, which matters for the American Sign Language (ASL) fingerspelling alphabet. Finally, the glove has a nine-degree-of-freedom inertial measurement unit (IMU), combining a three-axis accelerometer, gyroscope, and magnetometer, so it can track the hand’s orientation in pitch, yaw, and roll.
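The post doesn’t show the glove’s firmware, so here is only a minimal Python sketch of how one snapshot of those sensors might be flattened into a single feature vector for a classifier. Everything in it is an assumption for illustration: the `GloveFrame` structure, the `feature_vector` and `tilt_from_accel` helpers, the 0 to 1023 flex-sensor range, and the accelerometer axis convention. Pitch and roll are estimated from gravity alone, which only holds while the hand is roughly still; yaw can’t be recovered from the accelerometer and would need the magnetometer and/or gyroscope.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class GloveFrame:
    """One hypothetical snapshot of the glove's sensors (illustrative only)."""
    flex: List[float]                   # raw flex readings, one per joint (assumed 0..1023 counts)
    touch: List[bool]                   # capacitive pads: True while two fingers touch
    accel: Tuple[float, float, float]   # (ax, ay, az) in any consistent unit


def tilt_from_accel(ax: float, ay: float, az: float) -> Tuple[float, float]:
    """Estimate (pitch, roll) in degrees from the gravity vector.

    Assumes z points out of the back of the hand when it lies flat.
    Only valid while the hand is not accelerating; yaw is not observable here.
    """
    pitch = math.atan2(-ax, math.sqrt(ay * ay + az * az))
    roll = math.atan2(ay, az)
    return math.degrees(pitch), math.degrees(roll)


def feature_vector(frame: GloveFrame, flex_min: float = 0.0,
                   flex_max: float = 1023.0) -> List[float]:
    """Flatten one frame into floats of similar scale for a classifier."""
    span = flex_max - flex_min
    bends = [(v - flex_min) / span for v in frame.flex]   # 0.0 = straight, 1.0 = fully bent (assumed)
    touches = [1.0 if t else 0.0 for t in frame.touch]    # booleans as 0/1 features
    pitch, roll = tilt_from_accel(*frame.accel)
    return bends + touches + [pitch / 180.0, roll / 180.0]  # angles scaled to [-1, 1]


frame = GloveFrame(flex=[0.0, 1023.0], touch=[True, False], accel=(0.0, 0.0, 1.0))
print(feature_vector(frame))
```

Scaling every feature into a similar numeric range keeps angle features from dominating distance- or margin-based classifiers such as SVMs.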

In short, the glove takes in a lot of data. The data is cleaned up and analyzed on a Teensy 3.2 board, then sent over Bluetooth to its final destination. A lot of work has gone into the software side as well, with some still left to do. Have a read through the project’s report (PDF) if you’re interested in support vector machines for sign classification.
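The report describes the project’s actual classifier. As a hedged, self-contained illustration of the general idea only, here is a linear support vector machine trained with the Pegasos stochastic sub-gradient method, extended to several signs with one-vs-rest voting. Pegasos, the linear kernel, one-vs-rest, the hyperparameters, the function names, and the toy data are all my own choices for the sketch, not details taken from the Hands|On project.

```python
import random
from typing import Dict, List, Sequence

Vector = List[float]


def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def train_binary_svm(xs: Sequence[Sequence[float]], ys: Sequence[int],
                     lam: float = 0.1, epochs: int = 500, seed: int = 0) -> Vector:
    """Pegasos: stochastic sub-gradient descent on
    (lam / 2) * ||w||^2 + mean(max(0, 1 - y * <w, x>)).

    ys holds +1 / -1 labels. A constant 1.0 is appended to every sample, so the
    last weight acts as a bias (regularised with the rest, a common shortcut).
    """
    rng = random.Random(seed)
    data = [list(x) + [1.0] for x in xs]
    w = [0.0] * len(data[0])
    order = list(range(len(data)))
    t = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)                       # Pegasos step size
            x, y = data[i], ys[i]
            inside_margin = y * dot(w, x) < 1.0         # is the hinge loss active for this sample?
            w = [(1.0 - eta * lam) * wi for wi in w]    # step on the regulariser
            if inside_margin:
                w = [wi + eta * y * xi for wi, xi in zip(w, x)]  # step on the hinge loss
    return w


def train_one_vs_rest(xs, labels, **kwargs) -> Dict[str, Vector]:
    """Train one binary SVM per sign: that sign (+1) against all others (-1)."""
    return {c: train_binary_svm(xs, [1 if lab == c else -1 for lab in labels], **kwargs)
            for c in sorted(set(labels))}


def predict(models: Dict[str, Vector], x: Sequence[float]) -> str:
    """Return the sign whose SVM gives the largest decision value for x."""
    xb = list(x) + [1.0]
    return max(models, key=lambda c: dot(models[c], xb))


# Toy 2-D features for three made-up handshapes "A", "B", "C" (not real glove data).
xs = [[3.0, 0.2], [2.8, -0.1], [3.2, 0.0],
      [-1.5, 2.7], [-1.3, 2.5], [-1.7, 2.6],
      [-1.5, -2.6], [-1.4, -2.8], [-1.6, -2.5]]
labels = ["A"] * 3 + ["B"] * 3 + ["C"] * 3
models = train_one_vs_rest(xs, labels)
print(predict(models, [2.9, 0.1]), predict(models, [-1.4, 2.6]))
```

One-vs-rest keeps each model a plain binary SVM; a real glove classifier would feed in the full per-frame feature vector instead of two toy numbers, and might well use a kernel SVM or a library implementation instead.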

Sign language is the native language of many deaf people, and it’s a shame that the hearing community can’t understand it directly. Breaking down that barrier is a great idea, and it makes a great entry in the Hackaday Prize!