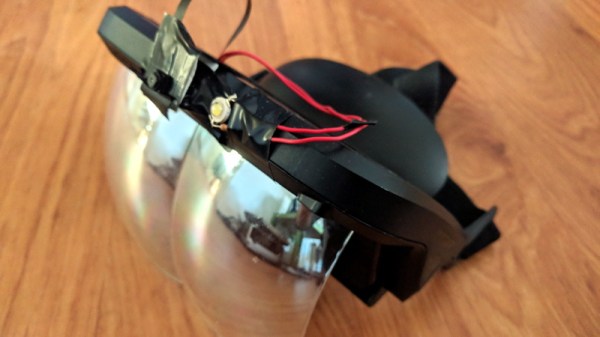

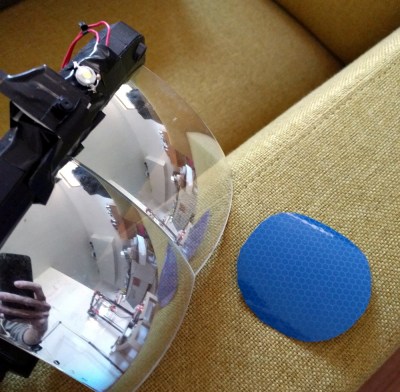

The folks behind the Atmos Extended Reality (XR) headset want to provide improved accessibility with an open ecosystem, and they aim to do it with a WebVR-capable headset design that is self-contained, 3D-printable, and open-sourced. Their immediate goal is to release a development kit, then refine the design for a wider release.

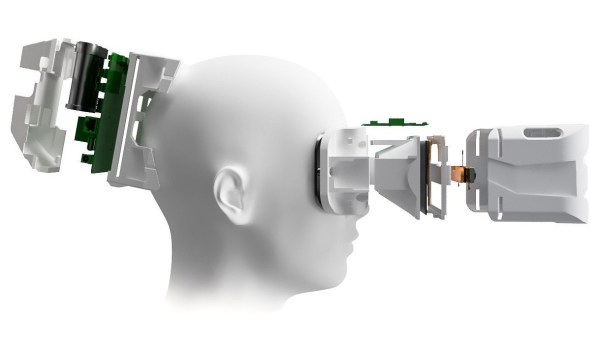

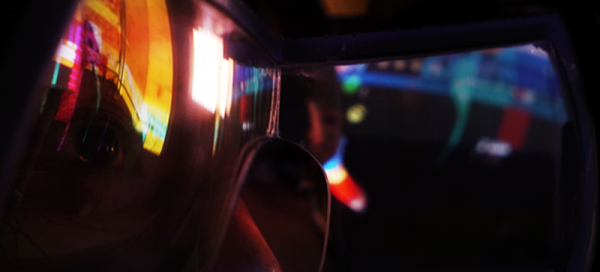

The front of the headset has a camera-based tracking board to provide all the modern goodies like inside-out head and hand tracking, as well as video passthrough. The design also provides for a variety of interface methods such as eye tracking and 6DoF controllers.

With all that, the headset is meant to give users maximum flexibility to experiment with and create different applications, while keeping development simple. A short video showing off the modular design of the HMD and optical assembly is embedded below.

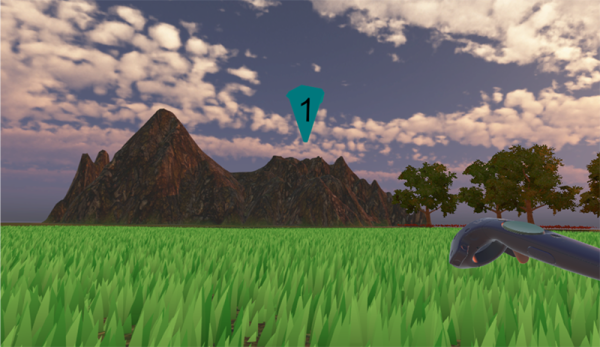

Extended Reality (XR) has emerged as a catch-all term to cover broad combinations of real and virtual elements. On one end of the spectrum are completely virtual elements such as in virtual reality (VR), and towards the other end of the spectrum are things like augmented reality (AR) in which virtual elements are integrated with real ones in varying ratios. With the ability to sense the real world and pass through video from the cameras, developers can choose to integrate as much or as little as they wish.

Terms like XR are a sign that the whole scene is still rapidly changing, and it’s fascinating to see that development in this area is still within reach of small developers and individual hackers. The team aims to release the Atmos DK 1 developer kit sometime in July, so anyone interested in getting in on the ground floor should read up on how to get involved with the project. For now, that page points people to the team’s Twitter account (@atmosxr) and invites developers to their Discord server. You can also follow along on their newly published Hackaday.io page.
