Augmented reality (AR) and natural gesture input provide a tantalizing glimpse at what human-computer interfaces may look like in the future, but at this point, the technology hasn’t seen much adoption within the open source community. Though to be fair, it seems like the big commercial players aren’t faring much better so far. You could make the case that the biggest roadblock, beyond the general lack of software this early in the game, is access to an open and affordable augmented reality headset.

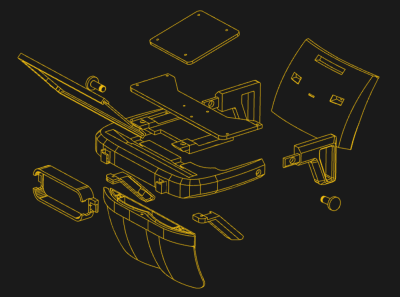

Which is precisely why [Graham Atlee] has developed the Triton. This Creative Commons licensed headset combines commercial off-the-shelf components with 3D printed parts to provide a capable AR experience at a hacker-friendly price. By printing your own parts and ordering the components from AliExpress, basic AR functionality should cost you $150 to $200 USD. If you want to add gesture support you’ll need to add a Leap Motion to your bill of materials, but even still, it’s a solid deal.

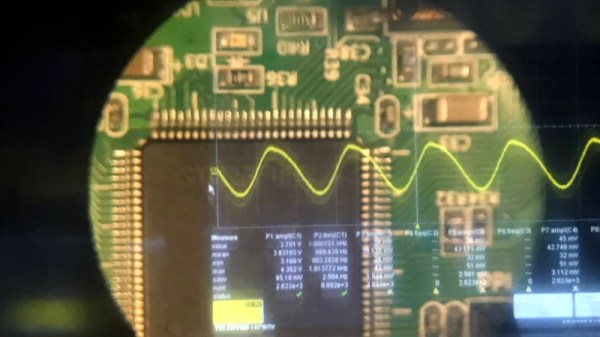

The trick here is that [Graham] is using the reflectors from a surprisingly cheap AR headset designed to work with a smartphone. By combining these mass-produced optics with a six-inch 1440 x 2560 LCD panel inside of the Triton’s 3D printed structure, projecting high quality images over the user’s field of view is far simpler than you might think.
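For a rough sense of what that panel offers, here's a back-of-the-envelope pixel density calculation. It's only a sketch: the resolution comes from the figures above, but treating "six inch" as the diagonal measurement is our assumption, and the function name is purely illustrative.

```python
import math

# Panel figures quoted in the article. Treating "six inch" as the
# diagonal measurement is an assumption, not something the build docs confirm.
WIDTH_PX = 1440
HEIGHT_PX = 2560
DIAGONAL_IN = 6.0


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Return pixel density: diagonal length in pixels divided by diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


ppi = pixels_per_inch(WIDTH_PX, HEIGHT_PX, DIAGONAL_IN)
print(f"Approximate pixel density: {ppi:.0f} PPI")
```

Under that assumption the panel works out to a little under 490 pixels per inch. What the wearer actually perceives will also depend on the reflectors, which this estimate ignores.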

If you plan to use it as a development platform for gesture interfaces, you’ll want to install a Leap Motion in the purpose-built socket in the front, but otherwise, all you need to do is plug in an HDMI video source. That could be anything from a low-power wearable to a high-end gaming computer, depending on what your goals are.

[Graham] has not only provided the STLs for all the 3D printed parts and a bill of materials, but he’s also done a fantastic job of documenting the build process with a step-by-step guide. This isn’t some theoretical creation; you could order the parts right now and start building your very own Triton. If you’re looking for software, he’s also selling a Windows-based “Triton AR Launcher” for the princely sum of $4.99 that looks pretty slick, but it’s absolutely not required to use the hardware.

Of course, plenty of people are more than happy to stick with the traditional keyboard and monitor setup. It’s hard to say if wearable displays and gesture interfaces will really become the norm, or if they’re better left to science fiction. But either way, we’re happy to see affordable open source platforms for experimenting with this cutting-edge technology. On the off chance any of them become the standard in the coming decades, we’d hate to be stuck in some inescapable walled garden because nobody developed any open alternatives.