PCB motors are useful things. With the coils printed right on the board, there’s no fussy winding to worry about, and it’s possible to make very compact, self-contained motors. [atomic14] has been doing some work in this area and decided to explore why wedge coils perform better than round coils in PCB motor designs.

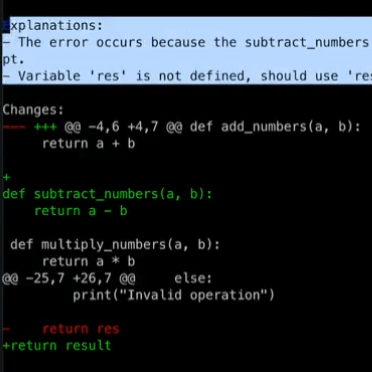

[atomic14]’s designs use four-layer PCBs, which allow more magnetic strength to be wrung out of coils made from traces. They’ve tried a variety of designs, but like most builders in this area, they used wedge-shaped coils to get the most torque out of their motors. As the video explains, the wedge layout packs far more efficiently, so coils with more turns fit in the same space. Diving deeper, [atomic14] also uses Python code to simulate the field generated by the different coil shapes. Most notably, the simulation shows that the wedge design produces a significantly stronger field in the direction that actually contributes to torque, an advantage that grows in motors with more coils.
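[atomic14]’s own simulation code isn’t reproduced here. As a rough illustration of the idea, the sketch below models each coil as a single-turn, infinitely thin loop in free space and sums the Biot-Savart contributions of many short straight segments. The helper names (`biot_savart`, `circle_loop`, `wedge_loop`), the dimensions, and the choice to look at the axial (z) field component as a stand-in for the "torque-relevant" direction are all assumptions for illustration, not details taken from the video.

```python
import numpy as np

MU0 = 4e-7 * np.pi  # vacuum permeability, T*m/A


def biot_savart(path, point, current=1.0):
    """Field (tesla) at `point` from a closed polyline `path` (N x 3, metres).

    Each straight segment is treated as a current element located at its
    midpoint (discretized Biot-Savart law); accuracy improves with more points.
    """
    p0 = path
    p1 = np.roll(path, -1, axis=0)  # next vertex; the last segment closes the loop
    dl = p1 - p0                    # segment vectors
    mid = 0.5 * (p0 + p1)           # segment midpoints
    r = point - mid                 # vectors from each segment to the field point
    r_norm = np.linalg.norm(r, axis=1)[:, None]
    dB = MU0 * current / (4 * np.pi) * np.cross(dl, r) / r_norm**3
    return dB.sum(axis=0)


def circle_loop(radius, n=2000):
    """Hypothetical round coil: one counter-clockwise turn in the z=0 plane."""
    t = np.linspace(0, 2 * np.pi, n, endpoint=False)
    return np.column_stack([radius * np.cos(t), radius * np.sin(t), np.zeros(n)])


def wedge_loop(r_in, r_out, span, n=500):
    """Hypothetical wedge coil: one counter-clockwise turn around an annular
    sector of angular width `span` (radians), centred on the +x axis."""
    a = np.linspace(-span / 2, span / 2, n)
    r = np.linspace(r_out, r_in, n)[1:-1]  # interior points of a radial edge
    outer = np.column_stack([r_out * np.cos(a), r_out * np.sin(a)])
    edge_in = np.column_stack([r * np.cos(a[-1]), r * np.sin(a[-1])])
    inner = np.column_stack([r_in * np.cos(a[::-1]), r_in * np.sin(a[::-1])])
    edge_out = np.column_stack([r[::-1] * np.cos(a[0]), r[::-1] * np.sin(a[0])])
    xy = np.vstack([outer, edge_in, inner, edge_out])
    return np.column_stack([xy, np.zeros(len(xy))])


if __name__ == "__main__":
    height = 0.5e-3  # assumed gap between the coil and the magnets, metres
    span = 2 * np.pi / 12  # assumed 12-coil stator
    wedge = wedge_loop(r_in=4e-3, r_out=15e-3, span=span)
    # Assumed round coil sized to sit inside the same sector
    circle = circle_loop(radius=2.4e-3) + np.array([9.5e-3, 0.0, 0.0])
    probe = np.array([9.5e-3, 0.0, height])
    print("wedge  Bz:", biot_savart(wedge, probe)[2], "T per amp-turn")
    print("circle Bz:", biot_savart(circle, probe)[2], "T per amp-turn")
```

A useful sanity check is the centre of a round loop, where the sketch should reproduce the textbook value μ0·I/(2R). A real comparison would also need multiple turns per layer, all four layers, trace width, and the force on the conductors in the magnets’ field, none of which this toy model includes.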

This kind of simulation and optimization is standard practice in industry, and it’s great to see real engineering methods explained on YouTube for everyone to enjoy. Video after the break.
