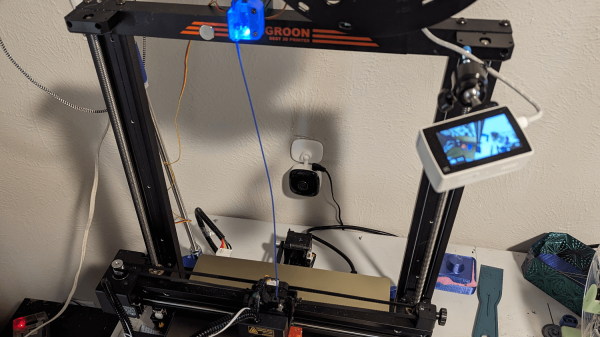

While 3D printer hardware has come a long way in the past decade and a half, the real development has been in the software. Open source slicers are constantly improving, and OctoPrint can turn even the most basic of printers into a network-connected powerhouse. But despite all these improvements, there are still certain combinations of hardware that require a bit of manual work.

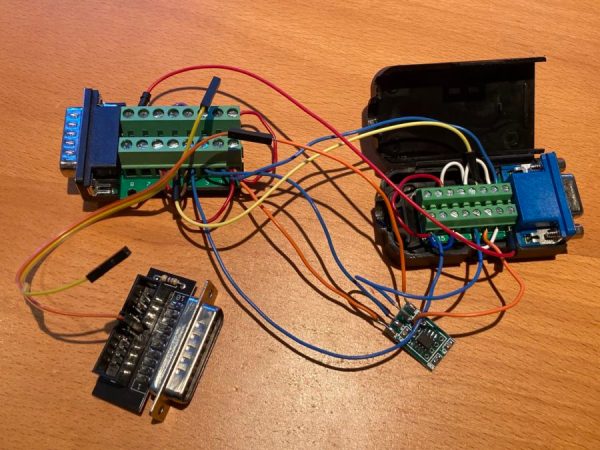

[Reticulated] wanted an easy way to monitor his prints over streaming video, but didn’t have any of the cameras that are supported by OctoPrint. Of course he could just point a cheap network-connected camera at the printer and be done with it, but he was looking for tighter integration than that. In the process, he demonstrates how to unlock some features hidden in inexpensive webcams.

He set about building something that wouldn’t require buying more equipment or overloading the limited hardware responsible for the actual printing. A few of his existing cameras have RTMP support, which allows a fairly straightforward setup with YouTube Live once MonaServer is set up to handle the RTMP feeds from the cameras and OBS Studio is configured to stream them out to YouTube. Using the OctoPrint API, he was able to pull data such as the current extruder temperature and overlay it on the video.
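To give a rough idea of what pulling that data looks like, here is a minimal Python sketch. It is not [Reticulated]’s actual script. It assumes OctoPrint’s REST endpoint `GET /api/printer` with an `X-Api-Key` header, and it assumes the overlay is done by pointing an OBS text source at a plain text file. The host name, API key, file name, and all function names are placeholders made up for illustration.

```python
import json
import time
import urllib.request
from pathlib import Path

# All three values below are placeholders (assumptions), not from the original guide.
OCTOPRINT_URL = "http://octopi.local"
API_KEY = "YOUR_API_KEY"
OVERLAY_FILE = Path("overlay.txt")  # hypothetical file an OBS text source would read


def fetch_printer_state(base_url: str, api_key: str) -> dict:
    """Request the printer state from OctoPrint's /api/printer endpoint."""
    request = urllib.request.Request(
        f"{base_url}/api/printer",
        headers={"X-Api-Key": api_key},
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return json.load(response)


def format_overlay(state: dict) -> str:
    """Turn the 'temperature' part of the response into short overlay lines."""
    temps = state.get("temperature", {})
    lines = []
    for name in ("tool0", "bed"):
        reading = temps.get(name)
        if reading is None:
            continue  # this heater is not reported by the printer
        # Treat a missing or null value as 0.0 so formatting never fails.
        actual = reading.get("actual") or 0.0
        target = reading.get("target") or 0.0
        lines.append(f"{name}: {actual:.1f} / {target:.1f} C")
    return "\n".join(lines)


def run(poll_seconds: float = 5.0) -> None:
    """Poll OctoPrint forever and rewrite the overlay file on each pass."""
    while True:
        try:
            state = fetch_printer_state(OCTOPRINT_URL, API_KEY)
            OVERLAY_FILE.write_text(format_overlay(state), encoding="utf-8")
        except OSError as error:
            # Covers connection failures and HTTP errors from urllib.
            OVERLAY_FILE.write_text(f"printer offline ({error})", encoding="utf-8")
        time.sleep(poll_seconds)
```

Calling `run()` on any machine that can reach OctoPrint keeps the text file current, which leaves the printer’s own hardware out of the video pipeline entirely. A few seconds between polls is plenty for a temperature readout.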

Another interesting part of this build is that not all of [Reticulated]’s cameras have built-in RTMP support, but by following this guide he was able to get more of them working with this setup than would have been possible by default. Even beyond 3D printing, this is an excellent guide (and tip) for getting a quick live stream going for whatever reason. For anything more mobile than a working 3D printer, though, you might want to look at taking your streaming setup mobile instead.