For her Hackaday Prize entry, [ThunderSqueak] is building an artificial intelligence. P.A.L., the Self-Programming AI Robot, builds on the kind of intelligence displayed by Amazon’s Alexa, Apple’s Siri, and whatever the Google thing is called. The goal is a robot that can learn from its environment, track objects, judge distances, and perform simple tasks.

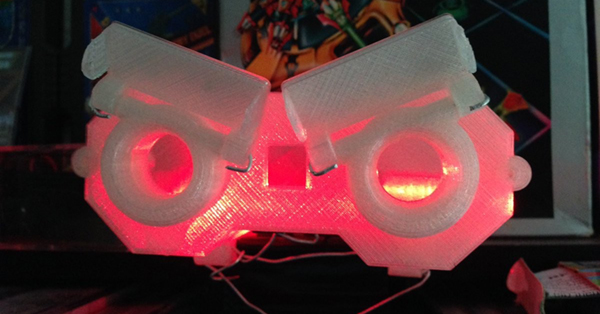

As with any robotic intelligence, the first question that comes to mind is, ‘what does it look like?’ The answer here is, ‘a little bit like Johnny Five.’ [ThunderSqueak] has designed a robotic chassis that uses treads for locomotion and a head that can emote by moving its eyebrows. Those treads were not a trivial engineering task: the tracks are 3D printed and bolted onto a chain, and making them has been a very, very annoying part of the project.

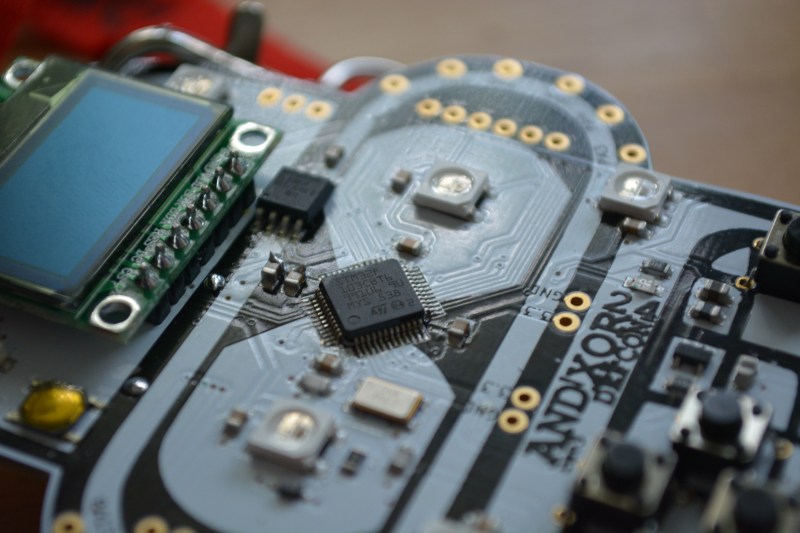

But no robot earns the label ‘intelligent’ by how it moves. The real work here is the software, and [ThunderSqueak] has a few tricks up her sleeve. She’s doing voice recognition on a microcontroller, correlating phonemes with their spectral signatures while using very little power.
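The write-up doesn’t spell out how the phoneme matching works, so what follows is only a hypothetical illustration of the general idea, not [ThunderSqueak]’s code. This minimal Python sketch measures signal power in a handful of frequency bands with the Goertzel algorithm (a common low-cost choice on microcontrollers, swapped in here as an assumption), normalizes those powers into a “spectral signature,” and picks the closest stored template. The band frequencies, the 8 kHz sample rate, and the two vowel templates (`ah`, `ee`, built from synthetic tones) are all made-up assumptions.

```python
import math

# ASSUMPTION: hypothetical analysis bands (Hz) and sample rate, chosen for illustration.
BANDS_HZ = [300, 700, 1200, 2400]
SAMPLE_RATE = 8000

def goertzel_power(samples, freq, sample_rate=SAMPLE_RATE):
    """Power in the DFT bin nearest `freq`, computed with the Goertzel recurrence."""
    n = len(samples)
    k = round(n * freq / sample_rate)          # nearest DFT bin index
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2

def spectral_signature(samples):
    """Band powers normalized to sum to 1, so overall loudness doesn't matter."""
    powers = [goertzel_power(samples, f) for f in BANDS_HZ]
    total = sum(powers) or 1.0
    return [p / total for p in powers]

def classify(signature, templates):
    """Return the label of the template closest to `signature` (Euclidean distance)."""
    def dist(a, b):
        return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
    return min(templates, key=lambda label: dist(signature, templates[label]))

def tone(freqs_amps, n=400):
    """Synthetic test signal: a sum of sine waves given as (frequency, amplitude) pairs."""
    return [sum(a * math.sin(2 * math.pi * f * i / SAMPLE_RATE) for f, a in freqs_amps)
            for i in range(n)]

# ASSUMPTION: hypothetical templates loosely inspired by vowel formant regions.
TEMPLATES = {
    "ah": spectral_signature(tone([(700, 1.0), (1200, 1.0)])),
    "ee": spectral_signature(tone([(300, 1.0), (2400, 1.0)])),
}

if __name__ == "__main__":
    heard = tone([(300, 0.5), (2400, 0.4)])    # quieter, unevenly weighted "ee"-like input
    print(classify(spectral_signature(heard), TEMPLATES))  # ee
```

Goertzel is used here because it only computes the few bins you ask for, which keeps the arithmetic per sample small; a real recognizer would need far more bands, framing over time, and templates recorded from actual speech.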

The purpose of P.A.L. isn’t to have a conversation with a robotic friend in a weird ’80s escapade. The purpose of P.A.L. is to build a machine that can learn from its mistakes and pick up a little about its environment. This is where the really cool stuff in artificial intelligence happens, and it makes for an excellent entry in the Hackaday Prize.