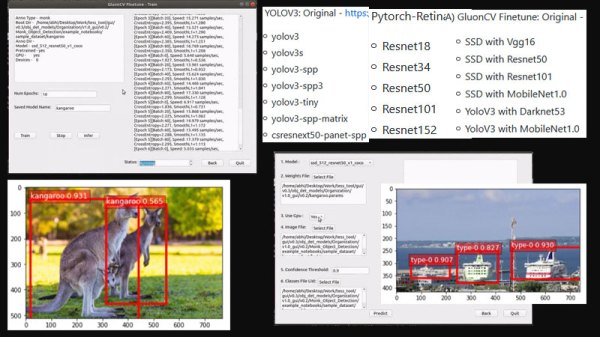

AI and deep learning for computer vision projects have come to the masses. This can be attributed partly to community projects that ease the pain for newbies. [Abhishek] contributes one such project, Monk AI, which comes with a GUI for transfer learning.

Monk AI is essentially a wrapper for computer vision and deep learning experiments. Written in Python, it lets users fine-tune deep neural networks using transfer learning. Out of the box it supports Keras and PyTorch, and with just a few lines of code you can get started with your very first AI experiment.
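
For readers new to the idea, here is a minimal sketch of what transfer learning does under the hood: keep a pretrained base frozen and train only a small new head on your own data. This is not Monk AI's API. It is a toy in plain Python, and the names `FROZEN_BASE`, `extract_features`, and `train_head`, along with the weights and the dataset, are made up for illustration.

```python
import math
import random

# Stand-in for a pretrained base network: fixed weights that are never updated.
# (Hypothetical values; a real base would be a network trained on a large dataset.)
FROZEN_BASE = [[1.0, -1.0], [0.5, 0.5]]

def extract_features(x):
    """Run the frozen base: a linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_BASE]

def predict(head, x):
    """New classifier head: logistic regression on the frozen features."""
    feats = extract_features(x)
    z = sum(w * f for w, f in zip(head["w"], feats)) + head["b"]
    return 1.0 / (1.0 + math.exp(-z))

def train_head(data, epochs=200, lr=0.5):
    """Train only the head with gradient descent; FROZEN_BASE is untouched."""
    head = {"w": [0.0, 0.0], "b": 0.0}
    for _ in range(epochs):
        for x, y in data:
            feats = extract_features(x)
            err = predict(head, x) - y  # gradient of log loss w.r.t. the logit
            head["w"] = [w - lr * err * f for w, f in zip(head["w"], feats)]
            head["b"] -= lr * err
    return head

# Toy dataset: the label is 1 when the first coordinate exceeds the second.
random.seed(0)
points = [(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(40)]
data = [((a, b), 1 if a > b else 0) for a, b in points]

head = train_head(data)
accuracy = sum((predict(head, x) > 0.5) == bool(y) for x, y in data) / len(data)
print(f"training accuracy: {accuracy:.2f}")
```

In a real project the frozen base would be a pretrained network such as a ResNet, and the framework would handle the freezing and training loop; wrappers like Monk AI aim to reduce that setup to a few calls.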

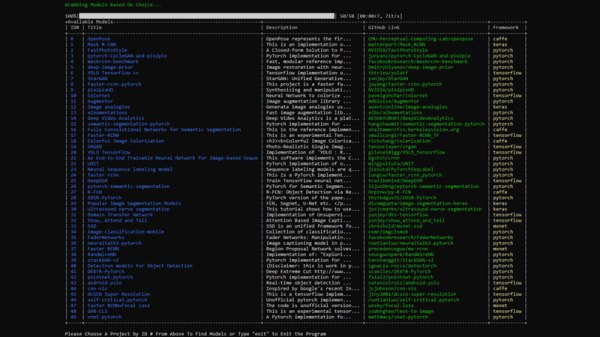

[Abhishek] also maintains an Object Detection wrapper (GitHub) with some useful examples, as well as a Monk GUI (GitHub) tool that resembles the tools available in commercial packages for running training and inference experiments.

The documentation is still a work in progress, but Monk AI seems like an excellent concept to build on. We need more tools like these to help more people get started with deep learning. Affordable hardware such as the Nvidia Jetson Nano and Google Coral makes learning and experimentation easier.