Having a dual- or quad-core CPU is not very exotic these days, and CPUs with 12 or even 16 cores aren’t that rare. The Andromeda from Cerebras is a supercomputer with 13.5 million cores. The company claims it is one of the largest AI supercomputers ever built (but not the largest) and can perform 120 petaflops of “dense compute.”

We aren’t sure about the methodology, but they also claim more than one exaflop of “AI computing.” The computer has a fabric backplane that can handle 96.8 terabits per second between nodes. According to a post on ExtremeTech, the core technology is a three-plane wafer processor, the WSE-2. One plane is for communications, one holds 40 GB of static RAM, and the math plane has 850,000 independent cores and 3.4 million floating-point units.

Data is sent to the cores, and results are collected, by a bank of 64-core third-generation AMD EPYC processors. Andromeda is optimized to handle sparse matrix computations. The company claims that the performance scales “almost linearly.” That is, as you double the number of cores used, you roughly halve the total run time.
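If you want a feel for what “almost linearly” means, here is a minimal Python sketch. It compares ideal linear scaling with Amdahl's law, a standard textbook model in which some fraction of the job stays serial. The function names, the 100-second baseline, and the 1% serial fraction are our own illustrative assumptions, not anything Cerebras has published.

```python
def ideal_runtime(t1, n):
    """Run time on n units under perfectly linear scaling."""
    return t1 / n


def amdahl_runtime(t1, n, serial_fraction):
    """Run time on n units when serial_fraction of the work cannot be parallelized."""
    return t1 * (serial_fraction + (1.0 - serial_fraction) / n)


def scaling_efficiency(t1, tn, n):
    """Fraction of ideal speedup achieved: 1.0 means perfectly linear."""
    return t1 / (n * tn)


if __name__ == "__main__":
    T1 = 100.0      # hypothetical run time on one unit, in seconds
    SERIAL = 0.01   # hypothetical serial fraction (assumption, not a measurement)

    for n in (1, 2, 4, 8, 16):
        ideal = ideal_runtime(T1, n)
        real = amdahl_runtime(T1, n, SERIAL)
        eff = scaling_efficiency(T1, real, n)
        print(f"{n:2d} units: ideal {ideal:7.2f} s, "
              f"with serial part {real:7.2f} s, efficiency {eff:.2f}")
```

With a serial fraction of zero, doubling the units exactly halves the run time. Any serial work at all pulls the efficiency below 1.0 as the unit count grows, which is why “almost” is doing real work in that claim.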

The machine is available for remote use and cost about $35 million to build. Since it draws 500 kW at peak, it isn’t free to operate, either. ExtremeTech notes that the Frontier computer at Oak Ridge National Laboratory is both larger and more precise, but it cost $600 million, so you’d expect it to be more capable.
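For a rough idea of what 500 kW means on the power bill, here is a back-of-the-envelope Python sketch. The 500 kW figure is the peak draw quoted above; the electricity price is a placeholder we made up for illustration, and the calculation assumes the machine runs at peak all day, which it presumably doesn't.

```python
PEAK_POWER_KW = 500.0   # peak draw quoted for Andromeda
PRICE_PER_KWH = 0.10    # hypothetical electricity price in dollars (assumption)


def energy_kwh(power_kw, hours):
    """Energy used when drawing power_kw continuously for the given hours."""
    return power_kw * hours


def energy_cost(power_kw, hours, price_per_kwh):
    """Cost of that energy at a flat price per kWh."""
    return energy_kwh(power_kw, hours) * price_per_kwh


if __name__ == "__main__":
    daily_kwh = energy_kwh(PEAK_POWER_KW, 24)
    daily_cost = energy_cost(PEAK_POWER_KW, 24, PRICE_PER_KWH)
    print(f"Energy per day at peak: {daily_kwh:,.0f} kWh")
    print(f"Cost per day at the assumed rate: ${daily_cost:,.2f}")
```

Swap in your own price per kWh to see how the number moves. Cooling and other facility overhead are not included.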

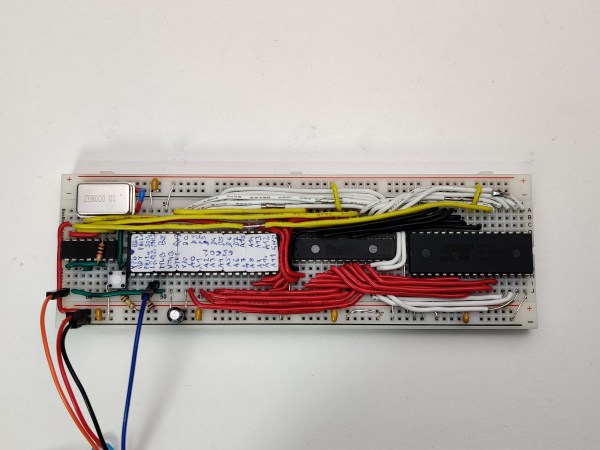

Most homebrew “supercomputers” we see are more for learning how to work with clusters than trying to hit this sort of performance. Of course, if you have a modern graphics card, OpenCL and CUDA will let you do some of this, too, but at a much smaller scale.