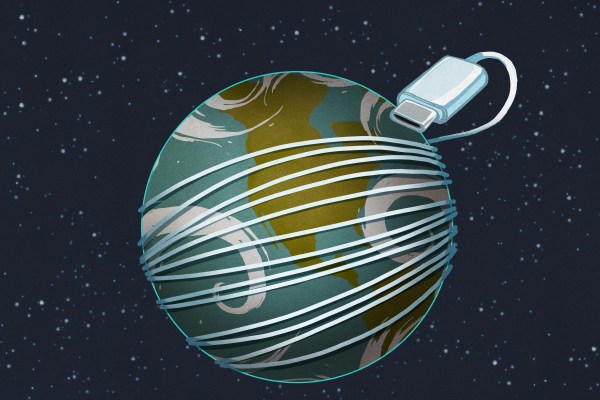

It is easy to forget that many technology juggernauts weren’t always the only game in town. Ethernet seems ubiquitous today, but it had to fight past several competing standards. VHS and Blu-ray beat out their respective competitors. But what about USB? Sure, it was off to a rocky start in the beginning, but what was the real competition at that time? SCSI? Firewire? While those had plusses and minuses, neither were really in a position to fill the gap that USB would inhabit. But [Ernie Smith] remembers ACCESS.bus (or, sometimes, A.b) — what you might be using today if USB hadn’t taken over the world.

Back in the mid-1980s, there were several competing serial bus systems including Apple Desktop Bus and some other brand-specific things from companies like Commodore (the IEC bus) and Atari (SIO). The problem is that all of these things belong to one company. If you wanted to make, say, keyboards, this was terrible. Your Apple keyboard didn’t fit your Atari or your IBM computer. But there was a very robust serial protocol already in use — one you’ve probably used yourself. IIC or I2C (depending on who you ask).