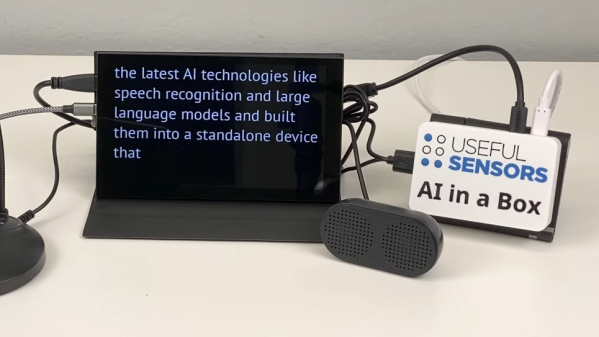

LLMs (Large Language Models) for local use are usually distributed as a set of weights in a multi-gigabyte file. These cannot be directly used on their own, which generally makes them harder to distribute and run compared to other software. A given model can also have undergone changes and tweaks, leading to different results if different versions are used.

To help with that, Mozilla’s innovation group have released llamafile, an open source method of turning a set of weights into a single binary that runs on six different OSes (macOS, Windows, Linux, FreeBSD, OpenBSD, and NetBSD) without needing to be installed. This makes it dramatically easier to distribute and run LLMs, as well as ensuring that a particular version of LLM remains consistent and reproducible, forever.

This wouldn’t be possible without the work of [Justine Tunney], creator of Cosmopolitan, a build-once-run-anywhere framework. The other main part is llama.cpp, and we’ve covered why it is such a big deal when it comes to running self-hosted LLMs.

There are some sample binaries available using the Mistral-7B, WizardCoder-Python-13B, and LLaVA 1.5 LLMs. Just keep in mind that if you’re on a Windows platform, only the LLaVA 1.5 will run, because it’s the only one that squeaks under the 4 GB limit on executable files that Windows has. If you run into issues, check out the gotchas list for troubleshooting tips.