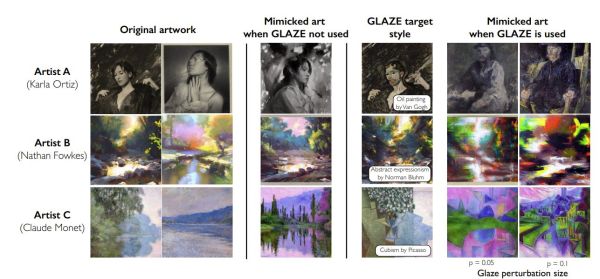

With the rise of machine-generated art we have also seen a major discussion begin about the ethics of using existing, human-made art to train these art models. Defenders of the models will often claim that the original art cannot be reproduced by the generator, but this is belied by the fact that one possible query to these generators is to produce art in the style of a specific artist. This is where feature extraction comes into play, along with the Glaze tool as a potential means of obfuscation.

Glaze was developed by researchers at the University of Chicago, and the theory behind it is covered in their preprint paper. The essential concept is that an artist can pick a target ‘cloak style’, which Glaze uses to calculate specific perturbations that are added to the original image. These perturbations are not easily detected by the human eye, but will be picked up by the feature extraction algorithms of current machine-generated art models.
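To make the idea of a style cloak more concrete, here is a minimal toy sketch rather than Glaze's actual algorithm. It assumes a random linear map `W` as a stand-in for a feature extractor, flat vectors in place of images, and a simple per-pixel bound `eps` in place of whatever perceptibility budget the real tool uses; the name `compute_cloak` and all of its parameters are hypothetical. The sketch uses projected gradient descent to nudge the image's features toward those of a target-style image while keeping every pixel change small.

```python
import numpy as np

def compute_cloak(image, target_style_image, W, eps=0.02, steps=300):
    """Toy cloak: find a small delta so that the features of (image + delta)
    move toward the features of the target-style image.
    W is a hypothetical linear stand-in for a real feature extractor."""
    target_features = W @ target_style_image
    delta = np.zeros_like(image)
    # Step size 1/L, where L is the squared spectral norm of W, keeps
    # gradient descent on this quadratic objective stable.
    lr = 1.0 / (np.linalg.norm(W, 2) ** 2)
    for _ in range(steps):
        residual = W @ (image + delta) - target_features
        grad = W.T @ residual  # gradient of 0.5 * ||residual||^2 w.r.t. delta
        delta = delta - lr * grad
        # Projection step 1: keep each pixel change within +/- eps.
        delta = np.clip(delta, -eps, eps)
        # Projection step 2: keep the cloaked image inside the valid [0, 1] range.
        delta = np.clip(image + delta, 0.0, 1.0) - image
    return delta

# Assumed toy data: 64 "pixels" and 16 "features", all randomly generated.
rng = np.random.default_rng(0)
image = rng.random(64)
target = rng.random(64)
W = rng.normal(size=(16, 64))

delta = compute_cloak(image, target, W)
before = np.linalg.norm(W @ image - W @ target)
after = np.linalg.norm(W @ (image + delta) - W @ target)
print(f"feature distance before: {before:.3f}, after: {after:.3f}")
print(f"largest pixel change: {np.abs(delta).max():.4f}")
```

The point of the sketch is the trade-off: the pixel-space change stays capped at `eps`, while the distance in feature space to the target style shrinks. A real feature extractor is nonlinear, so its gradient would come from automatic differentiation rather than a closed-form `W.T @ residual`.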