People keep warning that Skynet and the great robot uprising is not that far away, what with all this recent AI and machine-learning malarky getting all the attention lately. But we think going straight for a terminator robot army is not a very smart approach, not least due to a lack of subtlety. We think that it’s a much better bet to take over the world one home appliance at a time, and this AI Powered coffee maker might just well be part of that master plan.

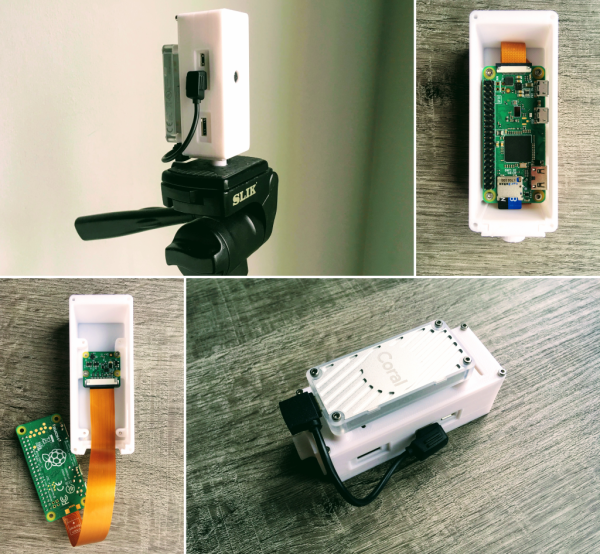

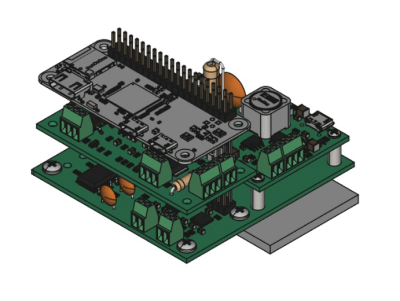

[Mark Smith] has taken a standard semi-auto espresso maker and jazzed it up a bit, with a sweet bar graph nixie tube the only obvious addition, at least from the front of the unit. Inside, a Raspberry Pi Zero sits atop his own nixie tube hat and associated power supply. The whole assembly is dropped into a 3D printed case and lives snuggled up to the water pump.

The Pi is running a web application written with the excellent Flask framework, and also an additional control application written in python. This allows the user to connect to the machine via Ethernet and see its status. The smarts are in the form of a simple self-grading machine learning algorithm, that takes a time series as an input (in this case when you take your shots of espresso) and after a few weeks of data, is able to make a reasonable prediction as to when you might want it in the future. It then automatically heats up in time for you to use the machine, when you usually do, then cools back down to save energy. No more pointless wandering around to see if the machine is hot enough yet – as you can just check the web page and see from the comfort of your desk.

But that’s not all [Mark] has done. He also improved the temperature control of the water boiler, and added an interlock that prevents the machine from producing a shot until the water temperature is just so. Water level is indicated by the glorious bar graph nixie tube, which also serves a few other user indication duties when appropriate. All in all a pretty sweet build, but we do add a word of caution: If your toaster starts making an unreasonable number of offers of toasted teacakes, give it a wide berth.