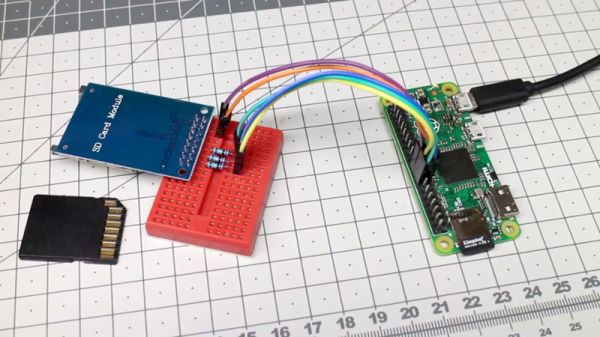

The Raspberry Pi Zero is a beautiful piece of hardware, fitting an entire Linux computer into a package the size of a pack of gum (don’t chew it, though). However, this size comes with limited IO options, which can be a complication for some projects. In this case, [Hugatry] wanted extra storage, and devised a smart method to add a second SD card to the Pi Zero.

The problem with the Pi Zero is that it has only a single USB data port, so adding more storage usually means resorting to bulky hubs or other workarounds. On top of that, the main SD card can't be removed while the Pi is running, so a second card that can be swapped out easily makes a lot of sense.

It’s quite a simple hack – all that’s required to pull it off is a few resistors, an SD card connector, and some jumper wires. With everything hooked up, a small configuration change enables the operating system to recognise the new card.
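The post doesn't spell out the configuration change. One plausible version is a single line in the boot partition's `config.txt`. It assumes the card is wired to GPIO 22 to 27 and uses the stock Raspberry Pi firmware's `sdio` device-tree overlay. Treat it as a sketch under those assumptions, not as [Hugatry]'s exact setup:

```ini
# Assumption (not confirmed by the original build): the second card's CLK, CMD
# and DAT0-DAT3 lines are wired to GPIO 22-27, the sdio overlay's default pins.
# poll_once=off makes the host keep polling for a card instead of checking only
# at boot, so the card can be inserted and removed while the Pi is running.
dtoverlay=sdio,poll_once=off
```

After a reboot, the new card would most likely appear as an additional block device, such as `/dev/mmcblk1`, and can be mounted like any other drive.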

It's always good to see hacks that add functionality to an already great platform. And if you find the Pi Zero isn't powerful enough, you can always try overclocking one.