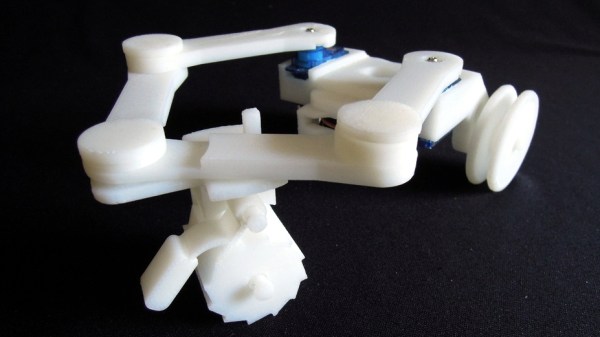

After you’ve taken a moment to ponder the turn of phrase used in the title, take a look at this scratch-built robotic vacuum created by [theking3737]. The entire body of the vacuum was 3D printed, and all of the internal electronics are off-the-shelf modular components. We can’t say how well it stacks up against the commercial equivalents from iRobot and the like, but it doesn’t look like it would be too hard to build one yourself to find out.

The body of this rather concerned-looking robot was printed on a DMS DP5 printer, which is a neat trick as it only has a build platform of 200 mm x 200 mm. Once all the pieces were printed, a 3D pen was used to “weld” the sections together. The final result looks a bit rough, but should give a bond that’s just as strong as the printed parts themselves.

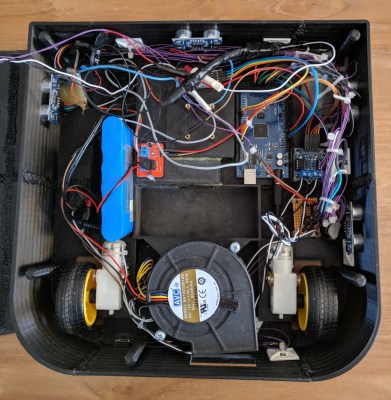

The robot has three sets of ultrasonic range finders to detect walls and obstacles, though probably not in the positions you would expect. The right side of the robot has two sets of sensors, while the left side only gets one. We aren’t sure of the reasoning behind the asymmetrical layout, but presumably the machine prefers making right turns.
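For anyone curious how an ultrasonic range finder turns a ping into a distance, here is a minimal sketch of the arithmetic. It assumes the common arrangement where the sensor reports the round-trip echo time in microseconds, and that a reading of zero means no echo came back. The names `echoToCm` and `isObstacle` and the threshold value are hypothetical, and this is not taken from [theking3737]'s firmware.

```cpp
// Speed of sound in air at roughly 20 °C, in centimetres per microsecond.
constexpr double kSoundCmPerUs = 0.0343;

// Convert a round-trip echo time (microseconds) into a one-way distance (cm).
// The pulse travels to the obstacle and back, hence the division by two.
constexpr double echoToCm(double echoUs) {
    return echoUs * kSoundCmPerUs / 2.0;
}

// Hypothetical helper: report an obstacle when a valid echo is closer than
// thresholdCm. An echo time of zero or less is treated as "nothing heard"
// (an assumption about how the sensor driver signals a timeout).
constexpr bool isObstacle(double echoUs, double thresholdCm) {
    return echoUs > 0.0 && echoToCm(echoUs) < thresholdCm;
}
```

With these numbers, a 1000 µs echo works out to a little over 17 cm, so `isObstacle(1000.0, 20.0)` is true while a 2000 µs echo (about 34 cm) is not flagged.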

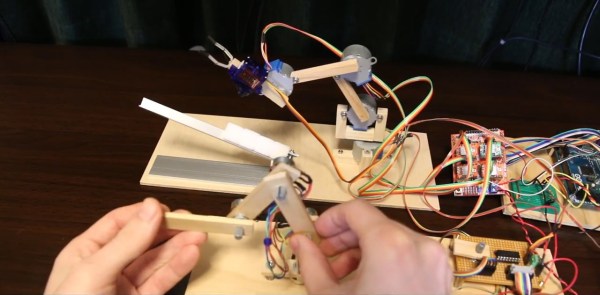

Control is provided by an Arduino Mega and the ever-reliable HC-05 Bluetooth module. A companion Android application was written which allows configuring the robot without having to plug into the Arduino every time you want to tweak a setting.
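The HC-05 presents itself to the microcontroller as a plain serial link, so remote configuration usually comes down to parsing short text commands. The sketch below shows one way that could look, assuming a made-up `name=value` line format with integer values. The actual protocol used by the companion app is not described, so the format, the `Setting` struct, and `parseSetting` are all hypothetical.

```cpp
#include <cstddef>
#include <cstdlib>
#include <string>

// Result of parsing one configuration line (hypothetical format: "name=value").
struct Setting {
    bool valid;        // false when the line could not be parsed
    std::string name;  // text before the '='
    long value;        // integer after the '='
};

// Parse a single line that has already had its line ending stripped.
// Returns valid == false for a missing '=', an empty name, an empty value,
// or a value that is not a clean base-10 integer.
Setting parseSetting(const std::string& line) {
    Setting result{false, "", 0};
    const std::size_t eq = line.find('=');
    if (eq == std::string::npos || eq == 0 || eq + 1 >= line.size()) {
        return result;
    }
    const std::string valueText = line.substr(eq + 1);
    char* end = nullptr;
    const long value = std::strtol(valueText.c_str(), &end, 10);
    if (end == valueText.c_str() || *end != '\0') {
        return result;  // trailing junk or no digits at all
    }
    result.valid = true;
    result.name = line.substr(0, eq);
    result.value = value;
    return result;
}
```

On the robot, each line read from the Bluetooth serial port would be handed to something like `parseSetting`, and lines that come back invalid would simply be ignored rather than applied.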

We can’t say we’ve seen that many DIY robotic vacuums here at Hackaday, but we’ve certainly featured our fair share of hacks for the commercially available models.

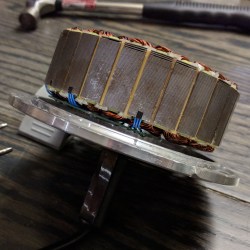

[madcowswe] starts by pointing out that the entire premise of ODrive (an open-source brushless motor driver board) is to make use of inexpensive brushless motors in industrial-type applications. This usually means using hobby electric aircraft motors, but robotic applications sometimes need more torque than those motors can provide. Adding a gearbox is one option, but there is another: so-called “hoverboard” motors are common and
