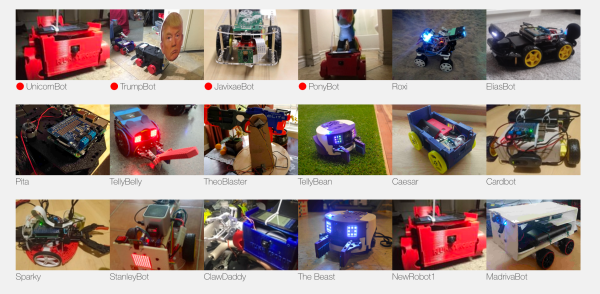

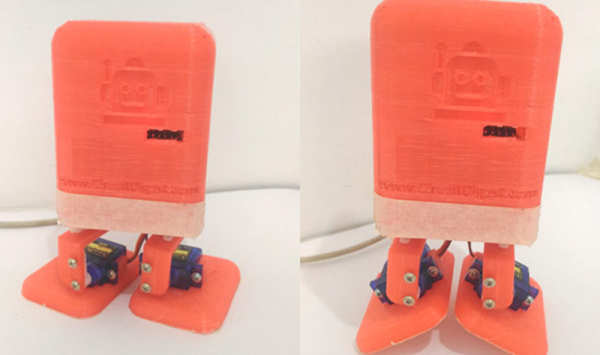

Since the beginning of the Internet, people have been controlling robots over it, peering at grainy GIFs of faraway rec rooms as the robot trundles around. RunMyRobot.com has taken that idea and brought it fully into the teens. These robots use Wi-Fi or mobile connections, are 3D printed, and run Python.

The site aims to provide everything to anyone who wants to participate. Even anonymous visitors can drive the robots, but anyone else can drive the same robot at the same time. Sometimes a whole crowd of visitors sends a cacophony of conflicting commands that takes the fun out of it, though you can always move to a different robot. Logged-in members have the option to take over a robot and lock everyone else out.

If you want to build a robot and add it to the site, the creators show you how. They provide a GitHub code repository, 3D-printable designs for download, and YouTube instructions for building either the printed robot or one made from off-the-shelf parts. They're also looking for patrons to help fund development, and the first item on their list is a mobile app.

Thanks to [Sim] for the link.