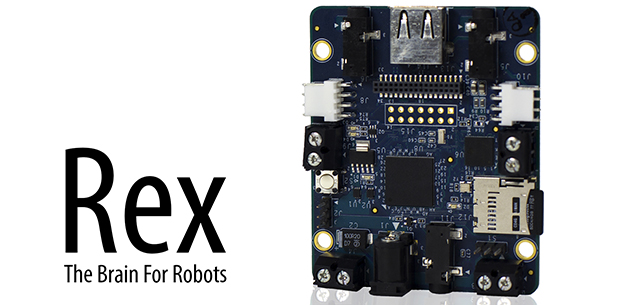

There are a million tutorials out there for building a robot with an Arduino or Raspberry Pi, but they all suffer from the same problem: neither the ‘duino nor the Raspi is a fully integrated solution that puts all the hardware (battery connectors, I/O ports, and everything else) on the same board. That’s the problem Rex, an ARM-powered robot controller, solves.

The specs for Rex include a 1 GHz ARM Cortex-A8 with a video SoC and DSP core, 512 MB of RAM, a USB host port, support for a camera module, and 3.5 mm jacks for stereo in and out. On top of that, there are I2C expansion ports for a servo adapter, plus an input and output for a 6-12 V battery. Basically, Rex is something akin to the BeagleBone Black, with the hardware optimized for a robotic control system.

Because shipping an ARM board without any software would be rather dull, the guys behind Rex came up with Alphalem OS, a Linux distro that includes scripts, sample programs, and an API for interacting with I2C devices. Of course, Rex will also run other robotics operating systems and the usual Debian/Ubuntu/Whathaveu distros.

It’s an impressive bit of hardware, capable of speech recognition and machine vision tasks with OpenCV. Combine this with a whole bunch of servos, and Rex can easily become the brains of a nightmarish hexapod robot that responds to your voice and follows you around the room.

You can pick up a Rex over on the Kickstarter with delivery due sometime this summer.

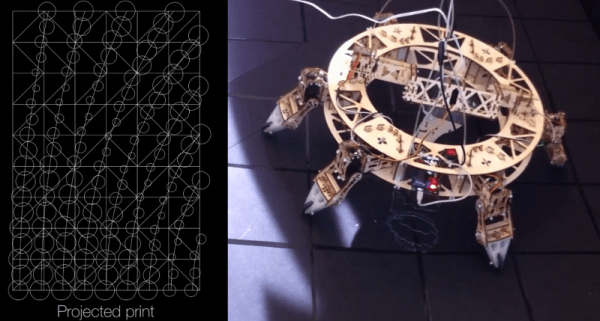

[Trandi] can check ‘build a self-balancing robot’ off of his to-do list. Over a couple of weekends,

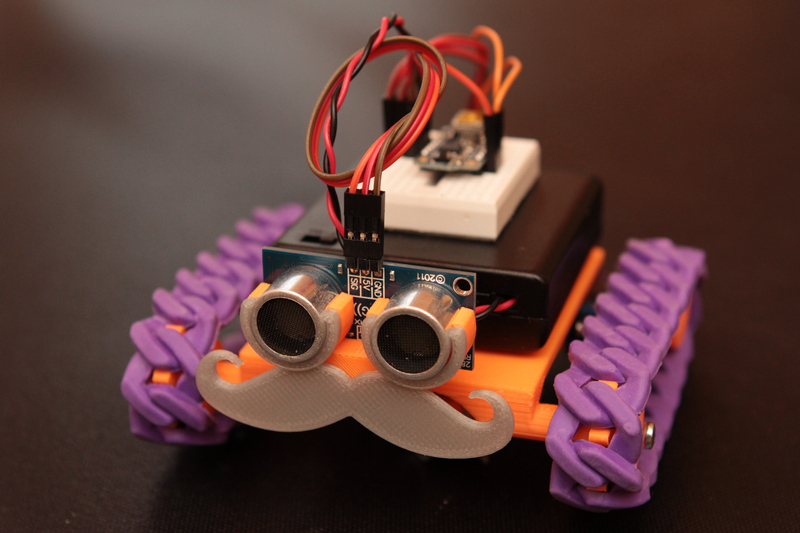

[Trandi] can check ‘build a self-balancing robot’ off of his to-do list. Over a couple of weekends,  [Rick], an Adafruit learning system contributor, is excited by the implications of STEM’s reach into K-12 education. He was inspired to design

[Rick], an Adafruit learning system contributor, is excited by the implications of STEM’s reach into K-12 education. He was inspired to design

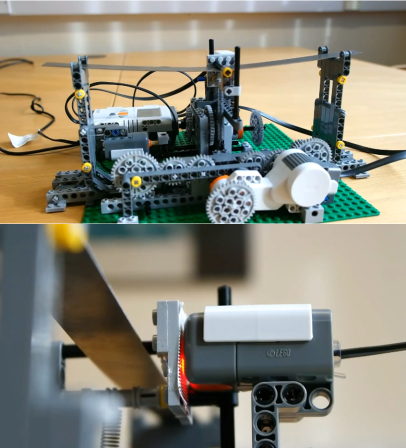

Learning with visuals can be very helpful. Learning with models made from NXT Mindstorms is just plain awesome, as [Rdsprm] demonstrates with this

Learning with visuals can be very helpful. Learning with models made from NXT Mindstorms is just plain awesome, as [Rdsprm] demonstrates with this