[Raymond Ma] has a penchant for browsing AliExpress and purchasing curious pieces of hardware that are as high on promises as they are low on cost. He aptly sums up the process with his opening line: "I should have known better, but…" Luckily, these devices all get torn down and analyzed so we can each enjoy and share a little slice of disappointment.

One such item is the $30 USD YT200 mini projector, which at 320×180 is almost as low on pixels as it is on cost. Still, [Raymond] looks inside to find out if there is perhaps more hacking potential than there is image resolution.

The YT200 lacks any kind of normal video input, and its anemic 15-lumen output is brazenly branded as a feature to protect children's eyes from excessive brightness.

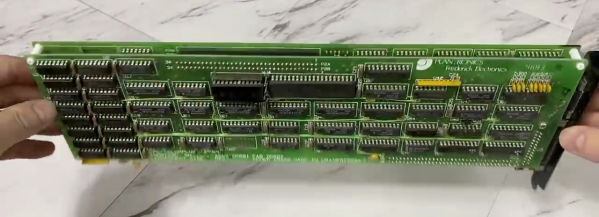

Light from the single LED is collimated by a pair of Fresnel lenses. That light then passes through an LCD panel, and from there the image bounces off a mirror and through a focusing lens housed in a spiral guide. Focus is adjusted with a small lever, and the whole assembly provides just enough friction to keep the lens from drifting out of focus on its own.

The device actually works fairly well for what it is: a way to play various media types from a connected USB storage device. As long as one is in a dark room, anyway.

[Raymond] hopes to find some alternate use for the device. Might we suggest projecting into a frosted glass globe to create a sort of spherical display? A spooky eye animation on a USB stick might pair well with that.