You could have said this at any time in the last couple of decades: the world of virtual reality peripherals does not yet feel as though it has fulfilled its potential. From the Amiga-powered Virtuality headsets and nausea-inducing Nintendo Virtual Boy of the 1990s to today’s crop of advanced headsets and peripherals, there has always been a sense that we’re not quite there yet. With the latest products, the hardware’s shortcomings may intrude on the virtual world less often, but true immersion still seems elusive at times.

One of the more interesting peripherals on the market today is the Leap Motion controller. This USB device contains infrared illuminators and cameras that provide enough resolution for its software to accurately calculate the positions of a user’s hands and fingers in three-dimensional space. Because it can track individual fingers, it can serve as a controller for complex interactions with, and manipulation of, objects in virtual worlds.

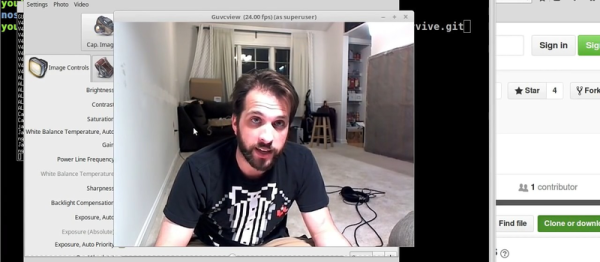

Even the Leap Motion has its shortcomings, though: there are moments when it simply loses tracking. Rotating your hand, as you might when aiming a virtual in-game weapon, confuses it. This led [Florian Maurer] to seek his own solution, and he’s come up with a hand-mounted peripheral containing a rotation sensor.

Inspired by a prop from the film Ender’s Game, it is a 3D-printed device that clips onto the palm of his hand between thumb and index finger. It contains an Arduino Pro Micro and a BNO055 rotation sensor, plus a couple of buttons for in-game actions such as triggers. It works around the Leap Motion’s trouble with detecting rotation, and it leaves his hand free enough that he can still use his keyboard and mouse while wearing it. Sadly he does not yet seem to have posted any code, but he does treat us to a video demonstration which we’ve posted below the break.
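Since no code has been published, the following is not Maurer’s implementation, just a hypothetical illustration of what a host program might do with the sensor’s output. In its sensor-fusion mode the BNO055 can report absolute orientation as a quaternion, and this minimal Python sketch converts such a quaternion into roll, pitch, and yaw angles using the common Z-Y-X (yaw-pitch-roll) convention. The `Quaternion` class and `quaternion_to_euler` function are our own illustrative names, not part of any library, and we assume the values have already been read off the serial link from the Arduino.

```python
import math
from dataclasses import dataclass


@dataclass
class Quaternion:
    """Hypothetical container for one orientation sample (w, x, y, z)."""
    w: float
    x: float
    y: float
    z: float


def quaternion_to_euler(q: Quaternion) -> tuple[float, float, float]:
    """Return (roll, pitch, yaw) in degrees, Z-Y-X convention.

    The quaternion is normalized first, so raw integer counts that share
    one uniform scale factor can be passed in directly.
    """
    n = math.sqrt(q.w ** 2 + q.x ** 2 + q.y ** 2 + q.z ** 2)
    w, x, y, z = q.w / n, q.x / n, q.y / n, q.z / n

    # Roll: rotation about the x axis.
    roll = math.atan2(2 * (w * x + y * z), 1 - 2 * (x * x + y * y))

    # Pitch: rotation about the y axis; clamp to guard asin against
    # rounding slightly outside [-1, 1] near +/-90 degrees.
    sinp = max(-1.0, min(1.0, 2 * (w * y - z * x)))
    pitch = math.asin(sinp)

    # Yaw: rotation about the z axis.
    yaw = math.atan2(2 * (w * z + x * y), 1 - 2 * (y * y + z * z))

    return math.degrees(roll), math.degrees(pitch), math.degrees(yaw)


if __name__ == "__main__":
    h = math.sqrt(0.5)
    # A 90-degree rotation about the x axis, e.g. the hand rolled onto its side.
    print(quaternion_to_euler(Quaternion(h, h, 0.0, 0.0)))
```

Which of the three angles corresponds to the wrist rotation that trips up the Leap Motion depends on how the sensor is mounted in the housing, so a real build would need to map axes accordingly.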
