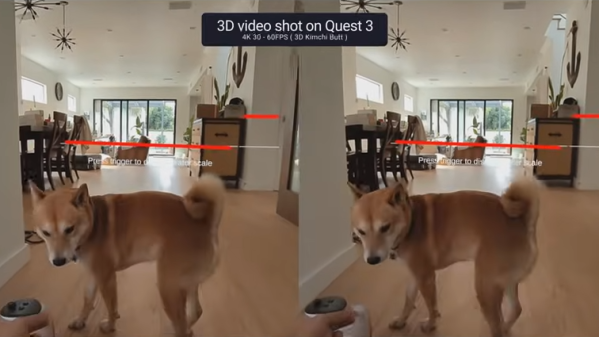

The Quest 3 VR headset is an impressive piece of hardware. It is also not open, at least not in the way most of us understand the word. One consequence is that developers and users generally cannot directly access the feeds from the two color cameras on the front of the headset. However, [Hugh Hou] shares a method for doing exactly that, capturing 3D video on the Quest 3 for later playback on other devices.

The process takes a few steps: enable developer mode on the headset, then use ADB (Android Debug Bridge) commands to turn on the necessary functionality. It’s nothing the average curious hacker can’t handle. The directions are written out in the video’s description, along with a few handy links. (The video is embedded below, but watch it on YouTube to see the description and all the information in it.)
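The camera-enabling commands themselves are in the video description and are not reproduced here. As a sensible first step, though, it is worth confirming that the headset is actually visible to ADB once developer mode is on. The following Python sketch assumes the `adb` tool from Google's Android SDK Platform-Tools is installed and on your PATH; the helper names `parse_adb_devices` and `list_ready_devices` are hypothetical, and the code only reads the standard `adb devices` listing without changing anything on the headset.

```python
import subprocess


def parse_adb_devices(output: str) -> list[str]:
    """Return the serials that `adb devices` reports as ready to use.

    `adb devices` prints a header ("List of devices attached") followed by
    one "<serial>\t<state>" line per device. Only the "device" state is
    usable; "unauthorized" usually means the USB debugging prompt inside
    the headset has not been accepted yet.
    """
    serials = []
    for line in output.splitlines():
        parts = line.split()
        # Header and daemon status lines never have "device" as the 2nd field.
        if len(parts) >= 2 and parts[1] == "device":
            serials.append(parts[0])
    return serials


def list_ready_devices() -> list[str]:
    # Assumption: the `adb` binary is on PATH; raises if it is missing.
    result = subprocess.run(
        ["adb", "devices"], capture_output=True, text=True, check=True
    )
    return parse_adb_devices(result.stdout)


# Usage on a captured listing (the serials here are made up for illustration):
sample = "List of devices attached\n2G0YC1ZF8B0123\tdevice\nABC123\tunauthorized\n"
print(parse_adb_devices(sample))  # ['2G0YC1ZF8B0123']
```

If the headset shows up as `unauthorized`, put it on and accept the debugging prompt before running any of the commands from the video description.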

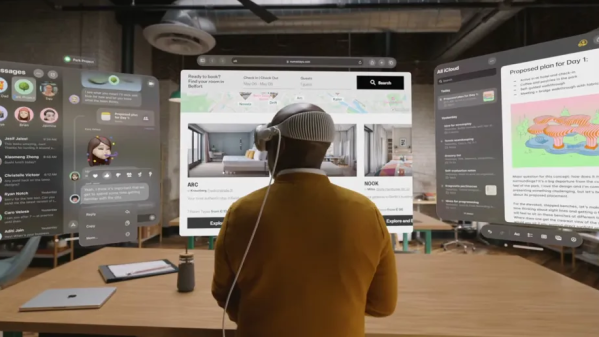

He also provides excellent guidance on practical matters such as capturing stable shots, editing the videos, and injecting the metadata needed for optimal playback on different platforms, including hassle-free uploading to services like YouTube. [Hugh] is no stranger to this kind of video and camera work and really knows his stuff, and it’s great to see someone provide such detailed instructions.

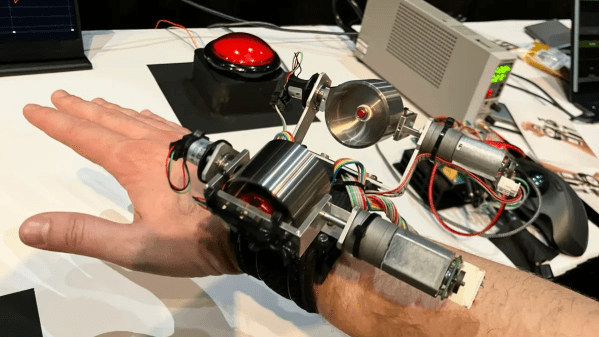

This kind of 3D video comes down to recording two different views, one for each eye. There’s another way to approach 3D video, however: light fields are also within reach of enterprising hackers, and while they need more hardware, they yield far more compelling results.
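To make the "two views, one for each eye" idea concrete: one common way to store stereo video is to pack both views into a single frame, side by side, and let the player split them apart again. The pure-Python sketch below shows that layout on toy frames represented as lists of pixel rows. It is an illustration under stated assumptions, not necessarily the format the Quest 3 method produces, and the function names are hypothetical; real tools work on decoded video frames rather than nested lists.

```python
def pack_side_by_side(left, right):
    """Pack two equally sized frames (lists of pixel rows) into one frame.

    Each output row is the left-eye row followed by the right-eye row,
    so the packed frame is twice as wide as either input view.
    """
    if len(left) != len(right):
        raise ValueError("left and right frames must have the same height")
    packed = []
    for l_row, r_row in zip(left, right):
        if len(l_row) != len(r_row):
            raise ValueError("left and right rows must have the same width")
        packed.append(list(l_row) + list(r_row))
    return packed


def unpack_side_by_side(frame):
    """Split a side-by-side frame back into its (left, right) views."""
    if any(len(row) % 2 for row in frame):
        raise ValueError("packed rows must have an even width")
    left = [row[: len(row) // 2] for row in frame]
    right = [row[len(row) // 2 :] for row in frame]
    return left, right


# Toy 2x2 "frames": numbers stand in for pixel values.
left_eye = [[1, 2], [3, 4]]
right_eye = [[5, 6], [7, 8]]
packed = pack_side_by_side(left_eye, right_eye)
print(packed)  # [[1, 2, 5, 6], [3, 4, 7, 8]]
```

The player only needs to know the layout (which is one thing injected metadata can describe) to route each half to the correct eye.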
