This is a pretty intricate camera mount. Not only does it provide pan and tilt, as the subtitles state, but it also moves along a track and offers zoom and focus controls. It's great, but you'll need an equally complex set of controls to do anything meaningful with it. That's where the real hack comes into play: the entire system is controlled through a virtual model of the rig in Blender 3D.

You probably already know that Blender 3D is an open-source 3D modeling suite. It has a mountain of features, including a framework for animating virtual objects. The camera rig was replicated inside the software, complete with a skeleton that moves just like the real thing. You can animate how the camera should move, then export those motions and play them back on the physical hardware.

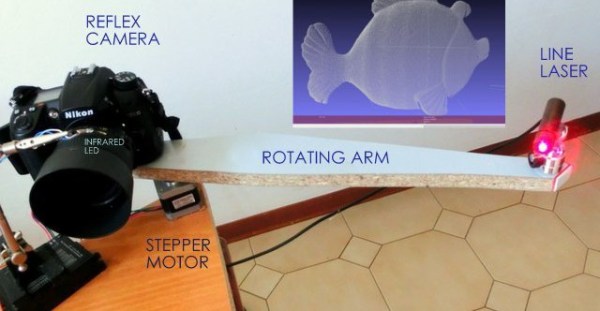

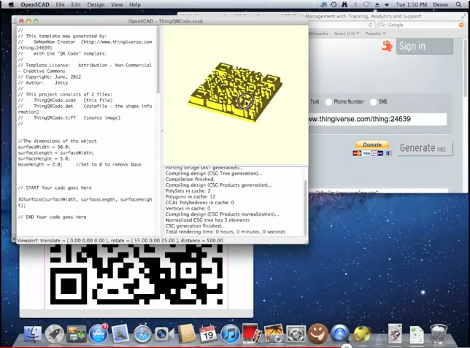

Now, if you need help making 3D models of your hardware, perhaps you should try scanning it.
