Apple has developed a proprietary, even mysterious, “fisheye” projection format used for their immersive videos, such as those played back by the Apple Vision Pro. What’s the mystery? The fact that they stream their immersive content in this format but have provided no elaboration, no details, and no method for anyone else to produce or play back this format. It’s a completely undocumented format, and Apple’s silence is deafening when it comes to requests for, well, anything to do with it whatsoever.

Probably those details are eventually forthcoming, but [Mike Swanson] isn’t satisfied to wait. He’s done his own digging into the format, and while he hasn’t figured it out completely, he has learned quite a bit and written it all up in a blog post. Apple’s immersive videos have a lot in common with VR180-type videos, but there is more going on under the hood. Apple’s stream is DRM-protected, but an unencrypted intro clip with a logo is streamed in the clear, and that’s what [Mike] has been focusing on.

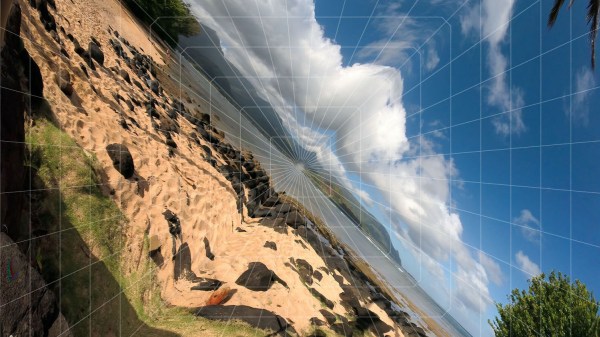

[Mike] has been able to determine that the format definitely differs from existing fisheye formats recorded by immersive cameras. First of all, the content is rotated 45 degrees. This spreads the horizon of the video across the diagonal, maximizing the number of pixels available in that direction (a trick that calls to mind the heads in home video recorders being tilted to increase the area of tape they can “see” beyond the physical width of the tape itself). Doing this also spreads the center-vertical axis of the content across the other diagonal, with the same effect.
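As a rough bit of arithmetic (not anything taken from Apple or from [Mike]’s post), here’s why the diagonal helps: in a square frame, a line running corner to corner is √2 ≈ 1.41 times as long as one running edge to edge, measured in pixel widths. The sketch assumes a hypothetical 4096×4096 per-eye frame, centered coordinates with y pointing up, and a horizon that gets mapped all the way into the corners; the direction of rotation is an arbitrary choice, and the helper names are made up for illustration.

```python
import math

SQRT_HALF = math.sqrt(0.5)  # cos(45°) == sin(45°)


def rotate_45(x: float, y: float) -> tuple[float, float]:
    """Rotate (x, y) by +45 degrees about the frame center.

    Coordinates are centered, so (0, 0) is the middle of the frame and
    y points up. The rotation direction is an arbitrary choice here.
    """
    return (SQRT_HALF * x - SQRT_HALF * y, SQRT_HALF * x + SQRT_HALF * y)


def horizon_length(side_px: int, rotated: bool) -> float:
    """Length of a horizon line across a square frame, in pixel widths.

    Unrotated, the horizon runs edge to edge (side_px long). Rotated by
    45 degrees and assumed to reach the corners, it runs corner to corner
    (side_px * sqrt(2) long).
    """
    return side_px * math.sqrt(2) if rotated else float(side_px)


side = 4096  # hypothetical per-eye frame size, for illustration only
flat = horizon_length(side, rotated=False)
diag = horizon_length(side, rotated=True)
print(f"edge-to-edge horizon:     {flat:.0f} px")
print(f"corner-to-corner horizon: {diag:.0f} px (+{(diag / flat - 1) * 100:.0f}%)")

# The horizon's right-hand point (1, 0) moves onto the upper-right diagonal.
print(rotate_45(1.0, 0.0))
```

The roughly 41% gain is just the ratio √2; it says nothing about how Apple actually distributes pixels along that line.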

There’s more to it than just a 45-degree rotation, however. The rest of the transform most closely resembles radial stretching, a form of disc-to-square mapping. It’s close, but [Mike] can’t find an exact match for what Apple is doing. Probably we’ll all learn more soon, but for now Apple isn’t saying much.
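For reference, here is a minimal sketch of the generic radial-stretching mapping: every circle of radius r inside the unit disc is pushed outward along its rays onto the square of half-side r, so the disc’s rim lands on the square’s border and rim points at 45 degrees land in the corners. This is the textbook form of the technique, not Apple’s transform (which [Mike] found to be close to it but not an exact match), and the function names are hypothetical.

```python
import math


def disc_to_square(u: float, v: float) -> tuple[float, float]:
    """Radial stretching: map a point in the unit disc onto the unit square.

    The point is scaled along its ray by r / max(|u|, |v|), which moves it
    from the circle of radius r onto the square of half-side r.
    """
    r = math.hypot(u, v)
    m = max(abs(u), abs(v))
    if m == 0.0:
        return (0.0, 0.0)  # the center maps to itself
    scale = r / m
    return (u * scale, v * scale)


def square_to_disc(x: float, y: float) -> tuple[float, float]:
    """Inverse of disc_to_square: squeeze the square back into the disc."""
    r = math.hypot(x, y)
    if r == 0.0:
        return (0.0, 0.0)
    scale = max(abs(x), abs(y)) / r
    return (x * scale, y * scale)


# A rim point at 45 degrees (where a 45-degree rotation would put the end
# of the horizon) is pushed out into the square's corner.
print(disc_to_square(math.sqrt(0.5), math.sqrt(0.5)))

# Round trip: stretching and then squeezing recovers the original point.
print(square_to_disc(*disc_to_square(0.3, -0.5)))
```

Pairing this with the 45-degree rotation is what lets the horizon reach the frame’s corners at all; a plain fisheye disc inscribed in the square never touches them.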

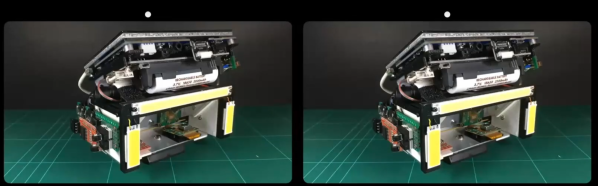

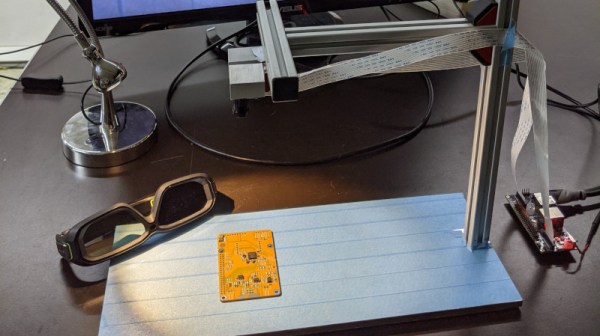

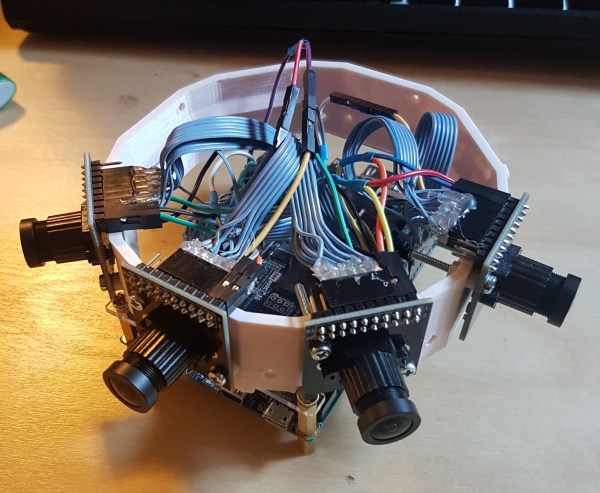

VR180 videos and Apple’s immersive format both deliver stereoscopic video that lets a user look around a scene naturally. But delivering a deeper sense of presence and depth takes light fields.