These days, just about anyone with a pulse can fall on a keyboard and make an AI image generator spurt out some kind of vaguely visual content. A lot of it is crap. Some of it’s confusing. Above all, creators hate seeing their hand-crafted works compared with these digital extrusions of mathematical slop. Enter the “not by AI” badge.

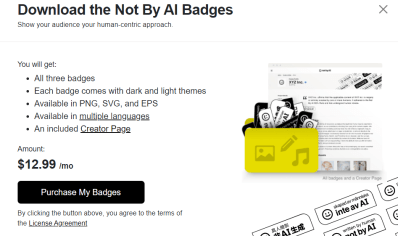

Basically, it’s exactly what it sounds like: a sleek, modern badge that you slap on your artwork to tell people that you made it, not an AI. There are pre-baked versions for writers (“written by human”), visual artists (“painted by human”), and musicians (“produced by human”). The idea is that these badges help people identify human-made content and steer clear of AI content if they’re trying to avoid it.

It’s not just intended for individual artworks. Websites and apps with “at least 90%” of their content created by humans are also invited to display the badge. This rule reveals an immediate flaw: a reader who lands on the AI-generated 10% of a badge-wearing site could easily assume a human made it. There’s also nothing stopping people from slapping the badge on AI-generated content and simply lying.

You might take a more cynical view if you dig deeper, though. The company behind the badge charges for various things, such as a monthly fee for businesses that want to display it.

We’ve talked about this before, when we asked a simple question: how do you convince people your artwork was made by a human? We’re not sure we’ve found the answer yet, but this badge program is at least trying to do something about the issue. Share your human thoughts in the comments below.