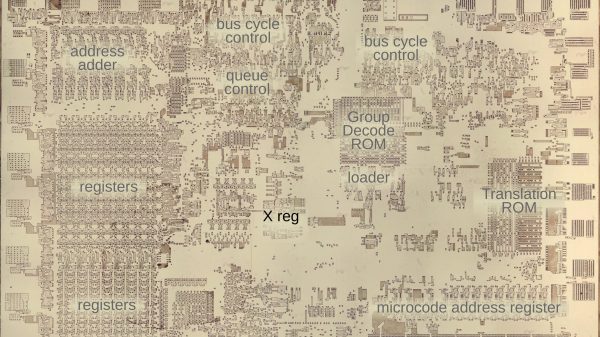

What do the HP-1000 and the DEC VAX 11/730 have in common with the video games Tempest and Battlezone? More than you might think. All of those machines, along with many others from that time period, used AM2900-family bit slice CPUs.

The bit slice CPU was a very successful product that could only have existed in the 1970s. Today, if you need a computer system, there are many CPUs and even entire systems on a chip to choose from. You can also get many small board-level systems that would probably do anything you want. In the 1960s, you had no choices at all. You built circuit boards with gates on them using transistors, tubes, relays, or, maybe, small-scale IC gates. Then you wired the boards up.

It didn’t take a genius to realize that it would be great to offer people a CPU chip like you can get today. The problem is the semiconductor technology of the day wouldn’t allow it — at least, not with any significant amount of resources. For example, the Motorola MC14500B from 1977 was a one-bit microprocessor, and while that had its uses, it wasn’t for everyone or everything.

The Answer

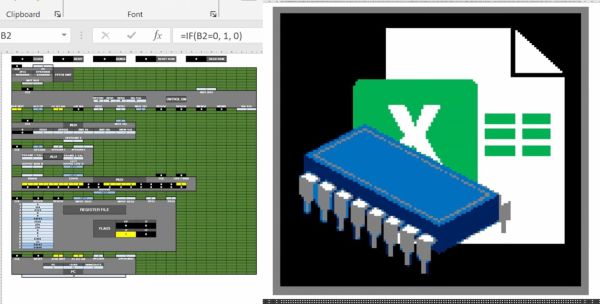

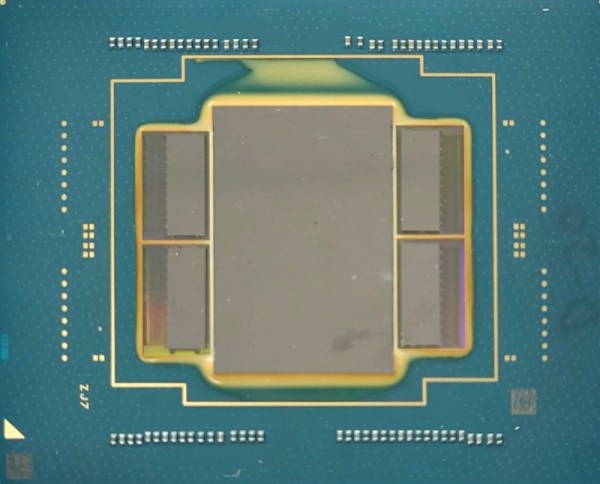

The answer was to produce as much of a CPU as possible in a chip and make provisions to use multiple chips together to build the CPU. That’s exactly what AMD did with the AM2900 family. If you think about it, what is a CPU? Sure, there are variations, but at the core, there’s a place to store instructions, a place to store data, some way to pick instructions, and a way to operate on data (like an ALU — arithmetic logic unit). Instructions move data from one place to another and set the state of things like I/O devices, ALU operations, and the like.
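To make the "multiple chips together" idea concrete, here is a minimal Python sketch of several narrow ALU slices chained into one wider adder. It assumes 4-bit slices (the width of the Am2901) and a simple ripple carry from one slice to the next. The names `slice_add` and `cascaded_add` are made up for illustration, and the model covers addition only, not the real chip's register file, shifter, or carry lookahead.

```python
SLICE_BITS = 4                        # assumed slice width
SLICE_MASK = (1 << SLICE_BITS) - 1    # 0xF


def slice_add(a, b, carry_in):
    """Hypothetical single slice: add two 4-bit values plus a carry-in.

    Returns (4-bit sum, carry-out).
    """
    total = (a & SLICE_MASK) + (b & SLICE_MASK) + carry_in
    return total & SLICE_MASK, total >> SLICE_BITS


def cascaded_add(a, b, num_slices=4):
    """Chain num_slices slices into one adder (4 slices -> 16 bits).

    Each slice handles its own 4 bits and hands its carry-out to the
    next, more significant slice.
    """
    result = 0
    carry = 0
    for i in range(num_slices):
        shift = i * SLICE_BITS
        part, carry = slice_add(a >> shift, b >> shift, carry)
        result |= part << shift
    return result, carry


if __name__ == "__main__":
    total, carry_out = cascaded_add(0x0FFF, 0x0001)
    print(hex(total), carry_out)
```

Changing `num_slices` is the software equivalent of adding or removing chips: the same slice logic gives you an 8-bit, 16-bit, or 32-bit datapath, which is the flexibility the bit slice approach was selling.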