Just as the gold standard for multimeters and other instrumentation likely comes in a yellow package of some sort, there is a similar household name for thermal imaging. But if they’re known for anything other than the highest quality thermal cameras, it’s their excessively high prices. There are cheaper options around, but if you want to be sure the finished product has some sort of quality control, you might want to consider building your own thermal imaging device like [Ruslan] has done here.

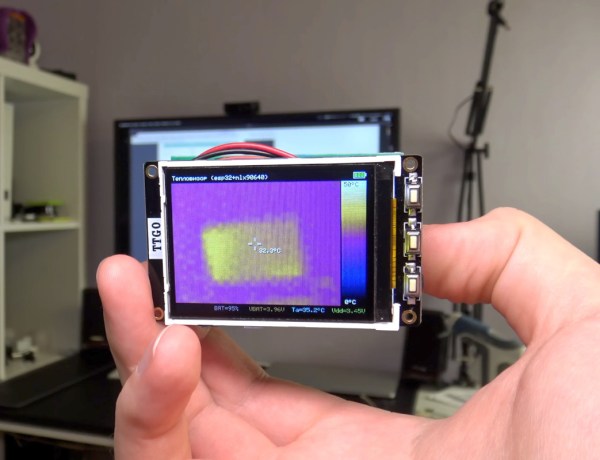

The pocket-sized thermal camera is built around an MLX90640 sensor from Melexis, which can be obtained on its own but can also be paired with an STM32F446 board with a USB connection in order to easily connect it to a computer. For this build, [Ruslan] paired it with an ESP32 board with a companion screen, so that the entire package could be assembled together with a battery and still maintain its sleek shape. The data coming from the thermal imaging sensor does need some post-processing in order to display useful images, but this is well within the capabilities of the STM32 and ESP32.
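To give a rough idea of what that post-processing can involve, here is a minimal Python sketch. It is not [Ruslan]'s firmware: it assumes the sensor driver has already produced a 32×24 grid of temperatures in degrees Celsius (the MLX90640's array size), and the function names `normalize`, `upscale_bilinear`, `to_rgb`, and `render` are made up for illustration. It scales the frame to its own temperature range, smooths the coarse grid with bilinear interpolation, and maps each value onto a simple blue-to-red palette.

```python
# Hypothetical sketch of thermal-frame post-processing (not the project's code).
ROWS, COLS = 24, 32  # MLX90640 pixel array: 24 rows by 32 columns


def normalize(frame):
    """Scale temperatures (deg C) to 0.0..1.0 using the frame's own min and max."""
    lo = min(min(row) for row in frame)
    hi = max(max(row) for row in frame)
    span = (hi - lo) or 1.0  # a perfectly uniform frame would otherwise divide by zero
    return [[(t - lo) / span for t in row] for row in frame]


def upscale_bilinear(frame, factor):
    """Enlarge the grid by an integer factor, blending the four nearest source pixels."""
    rows, cols = len(frame), len(frame[0])
    out = []
    for y in range(rows * factor):
        sy = min(y / factor, rows - 1)   # source row coordinate, clamped to the edge
        y0 = int(sy)
        y1 = min(y0 + 1, rows - 1)
        fy = sy - y0
        line = []
        for x in range(cols * factor):
            sx = min(x / factor, cols - 1)  # source column coordinate, clamped
            x0 = int(sx)
            x1 = min(x0 + 1, cols - 1)
            fx = sx - x0
            top = frame[y0][x0] * (1 - fx) + frame[y0][x1] * fx
            bottom = frame[y1][x0] * (1 - fx) + frame[y1][x1] * fx
            line.append(top * (1 - fy) + bottom * fy)
        out.append(line)
    return out


def to_rgb(level):
    """Map 0.0..1.0 onto a blue -> green -> red ramp (an arbitrary palette choice)."""
    level = max(0.0, min(1.0, level))
    if level < 0.5:
        k = level / 0.5
        return (0, int(255 * k), int(255 * (1 - k)))
    k = (level - 0.5) / 0.5
    return (int(255 * k), int(255 * (1 - k)), 0)


def render(frame, factor=4):
    """Turn a temperature frame into rows of (r, g, b) tuples ready for a display."""
    scaled = upscale_bilinear(normalize(frame), factor)
    return [[to_rgb(v) for v in row] for row in scaled]


if __name__ == "__main__":
    # Synthetic gradient standing in for a real sensor reading.
    demo = [[20.0 + r + c for c in range(COLS)] for r in range(ROWS)]
    image = render(demo, factor=4)
    print(len(image), "rows x", len(image[0]), "columns")
```

Normalizing per frame makes small temperature differences visible, at the cost of the colors meaning something different from one frame to the next; a fixed temperature range would trade contrast for consistency.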

With an operating time on battery of over eight hours and a weight under 100 grams, this could be just the thing for someone looking for a thermal camera who doesn’t want to give up an arm and a leg to one of the industry giants. If you’re looking for something even simpler, we’ve seen a thermal camera based on a Raspberry Pi that delivers its images over the network instead of on its own screen.