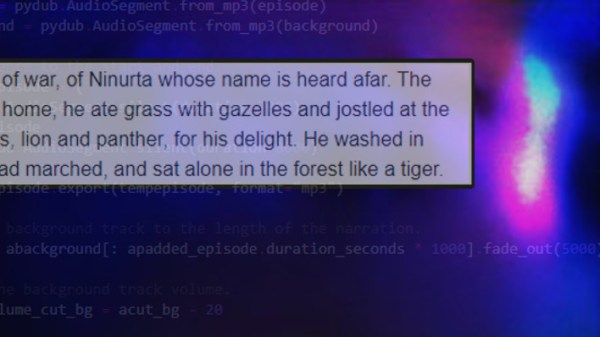

Want your next project to trash talk? Dynamically rewrite boring log messages as sci-fi technobabble? Happily (or grudgingly) answer questions? All of that and more can be done with OpenAI’s GPT-3, a natural language prediction model with an API that is probably a lot easier to use than you might think.

In fact, if you have basic Python coding skills, or even just the ability to craft a curl command, you have just about everything you need to add this ability to your next project. It’s not free in the long run, although signing up comes with some free initial use, and for personal projects the costs will be very small.

Basic Concepts

OpenAI has an API that provides access to GPT-3, a machine learning model with the ability to perform just about any task that involves understanding or generating natural-sounding language.

OpenAI provides some excellent documentation as well as a web tool through which one can experiment interactively. First, however, one must create an account and receive an API key. After that is done, the doors are open.
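
To give a feel for how little code is involved, here is a minimal sketch of what a request could look like using only Python's standard library. It assumes the completions-style endpoint URL shown, a Bearer-token `Authorization` header, and an `OPENAI_API_KEY` environment variable; the model name is a deliberate placeholder and `build_completion_request` is a hypothetical helper, so check OpenAI's documentation for current values. The sketch only builds the request and does not send it, so running it spends no credits.

```python
import json
import os
import urllib.request

# Assumption: completions-style endpoint; confirm the URL in OpenAI's docs.
API_URL = "https://api.openai.com/v1/completions"


def build_completion_request(prompt, api_key, model="MODEL_NAME_HERE", max_tokens=64):
    """Build (but do not send) an HTTP POST request asking for a completion.

    The model name is a placeholder; pick a real one from the documentation.
    """
    payload = {"model": model, "prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the API key from signup
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Read the key from the environment rather than hard-coding it.
    key = os.environ.get("OPENAI_API_KEY", "sk-placeholder")
    req = build_completion_request(
        "Rewrite this log message as sci-fi technobabble: disk full", key
    )
    print(req.get_method(), req.full_url)
    # Sending it would be: urllib.request.urlopen(req)  (this uses credits)
```

Keeping the key in an environment variable means it never ends up in source control along with the rest of the project.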

Creating an account also gives one a number of free credits that can be used to experiment with ideas. Once the free trial is used up or expires, using the API will cost money. How much? Not a lot, frankly. Everything sent to (and received from) the API is broken into tokens, and pricing ranges from $0.0008 to $0.06 per thousand tokens. A thousand tokens is roughly 750 words, so small projects are really not a big financial commitment. My free trial came with 18 USD of credits, of which I have so far barely managed to spend 5%.
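
The arithmetic behind that is easy to sketch. The snippet below is a rough estimator, assuming the 750-words-per-thousand-tokens rule of thumb and the per-thousand-token prices quoted above; the function names are my own and real token counts will vary with the text.

```python
WORDS_PER_1K_TOKENS = 750  # rule of thumb from the text, not an exact figure


def estimate_cost_usd(words, price_per_1k_tokens):
    """Approximate cost in USD of sending/receiving `words` words in total."""
    tokens = words / WORDS_PER_1K_TOKENS * 1000
    return tokens / 1000 * price_per_1k_tokens


def words_for_budget(budget_usd, price_per_1k_tokens):
    """Approximate number of words a given budget covers at a given price."""
    tokens = budget_usd / price_per_1k_tokens * 1000
    return tokens * WORDS_PER_1K_TOKENS / 1000


if __name__ == "__main__":
    # Cost of roughly 750 words at the cheapest and priciest quoted rates.
    for price in (0.0008, 0.06):
        print(f"${price}/1k tokens: 750 words costs about ${estimate_cost_usd(750, price):.4f}")

    # 5% of an 18 USD trial, spent entirely at the most expensive rate.
    spent = 18 * 0.05
    print(f"${spent:.2f} covers about {words_for_budget(spent, 0.06):,.0f} words")
```

Even at the most expensive rate, 5% of the trial credit works out to thousands of words of input and output combined.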

Let’s take a closer look at how it works, and what can be done with it!
