The old way was to write clever code that could handle every possible outcome. But what if you don’t know exactly what your inputs will look like, or just need a faster route to the final results? The answer is Machine Learning, and we want you to give it a try during the Train All the Things contest!

It’s hard to find a more buzz-worthy term than Artificial Intelligence. Right now, where the rubber hits the road in AI is Machine Learning, and it’s never been easier to get your feet wet in this realm.

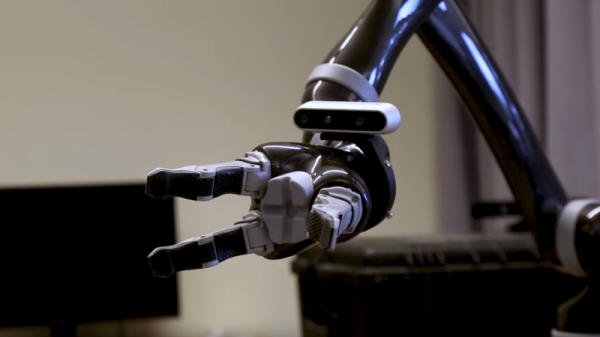

From an 8-bit microcontroller to common single-board computers, you can do cool things like object recognition or color classification quite easily. Grab a beefier processor or a dedicated ASIC, or lean heavily on the power of the cloud, and you can do much more, like facial identification and gesture recognition. And the sky’s the limit: a big part of this contest is getting everyone inspired by what you manage to pull off.

Yes, We Do Want to See Your ML “Hello World” Too!

Wait, wait, come back here. Have we already scared you off? Don’t read AI or ML and assume it’s not for you. We’ve included a category for “Artificial Intelligence Blinky” — your first attempt at doing something cool.

Need something simple to get you excited? How about Machine Learning on an ATtiny85 to sort Skittles candy by color? That project uses just one color sensor, a quick and easy way to harvest the data that forms a training set. But you could also climb up the ladder just a bit and make yourself a camera-based LEGO sorter, or use an IMU in a magic wand to detect which spell you’re casting. Need more scientific inspiration? We’re hoping someone will someday build a training set for classifying microscope shots of micrometeorites. But we’d be equally excited by projects that tackle robot locomotion, natural language, and all the other wild ideas you can come up with.
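To give a feel for how small an “AI blinky” can be, here is a minimal sketch of color classification in the spirit of the Skittles sorter. It is not that project’s code. It assumes each sensor reading is an (r, g, b) tuple, uses a nearest-centroid classifier as a stand-in technique, and the training values are made up for illustration. The names `train` and `classify` are hypothetical.

```python
# Nearest-centroid color classifier: a minimal "AI blinky"-style sketch.
# Assumption: each reading is an (r, g, b) tuple from a single color sensor.
# The sample values below are invented for illustration, not real sensor data.
from math import dist


def train(samples):
    """Average the labeled readings for each color into one centroid."""
    sums, counts = {}, {}
    for reading, label in samples:
        s = sums.setdefault(label, [0.0, 0.0, 0.0])
        for i, v in enumerate(reading):
            s[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in s) for label, s in sums.items()}


def classify(centroids, reading):
    """Return the label whose centroid is closest to the reading in RGB space."""
    return min(centroids, key=lambda label: dist(centroids[label], reading))


# Hypothetical labeled readings gathered by holding candies under the sensor.
training = [
    ((200, 40, 40), "red"),
    ((210, 50, 45), "red"),
    ((40, 180, 60), "green"),
    ((50, 170, 55), "green"),
    ((220, 200, 50), "yellow"),
]

centroids = train(training)
print(classify(centroids, (205, 45, 42)))  # prints: red
```

Training here is just averaging, and classifying a new candy is one distance comparison per color, so a port to a small microcontroller would only need to store one centroid per color.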

Our guess is you don’t really need prizes to get excited about this one… most people have been itching for a reason to try out machine learning for quite some time. But we do have $100 Tindie gift certificates for the most interesting entry in each of the four contest categories: ML on the edge, ML on the gateway, AI blinky, and ML in the cloud.

Get started on your entry. The Train All The Things contest is sponsored by Digi-Key and runs until April 7th.