Consider the complexity of the appendages sitting at the end of your arms. Human hands contain over a quarter of the entire complement of bones in the body, use dozens of muscles both in the hand itself and extending up the forearm, and are capable of an almost infinite variety of movements. They are exquisite machines.

And yet when it comes to virtual reality, most simulations treat the hands like inert blobs. That may be partly due to their complexity; doing motion capture from so many joints can be computationally challenging. But this pressure-sensitive hand motion capture rig aims to change that. The product of an undergraduate project by [Leslie], [Hunter], and [Matthew], the idea was to provide an economical and effective way to capture gestures for virtual reality simulators, which generally focus on capturing large motions from the whole body.

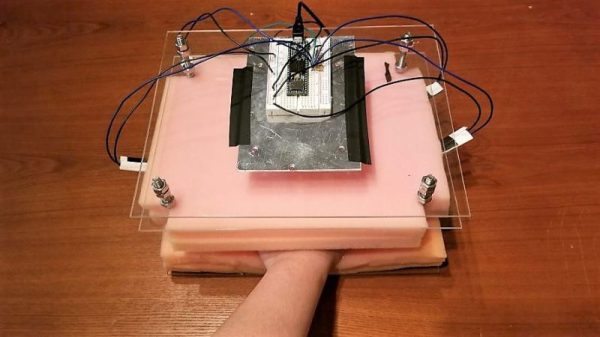

The sensor consists of a sandwich of polyurethane foam with strain gauge sensors embedded within. The user slips his or her hand into the foam and rests the fingers on the sensors. A Teensy and twenty lines of code translate finger motions within the sandwich into five axes of joystick movement, which are then sent to Unreal Engine, where they drive a 3D model of a hand to play a VR game of “Rock, Paper, Scissors.”
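
To get a feel for how little code the sensor-to-joystick step needs, here is a minimal sketch in Python rather than the team's actual Teensy firmware, which isn't shown here. It assumes each finger's sensor yields a raw integer reading, that a per-finger calibration of "relaxed" and "fully pressed" readings is available, and that a joystick axis runs from 0 to 1023. The names `FingerCalibration`, `reading_to_axis`, and `hand_to_axes` are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence

AXIS_MAX = 1023  # assumed joystick axis range (0..1023), not taken from the project


@dataclass
class FingerCalibration:
    """Hypothetical per-finger calibration: raw readings at rest and at full press."""
    rest: int
    pressed: int


def reading_to_axis(raw: int, cal: FingerCalibration) -> int:
    """Linearly map one raw sensor reading onto a joystick axis value."""
    span = cal.pressed - cal.rest
    if span == 0:
        return 0  # degenerate calibration; report a neutral axis
    fraction = (raw - cal.rest) / span
    fraction = min(1.0, max(0.0, fraction))  # clamp readings outside the calibrated range
    return round(fraction * AXIS_MAX)


def hand_to_axes(raw_readings: Sequence[int],
                 calibrations: Sequence[FingerCalibration]) -> List[int]:
    """Convert one reading per finger into one joystick axis per finger."""
    if len(raw_readings) != len(calibrations):
        raise ValueError("need exactly one calibration per sensor reading")
    return [reading_to_axis(r, c) for r, c in zip(raw_readings, calibrations)]
```

Calling `hand_to_axes` with five readings and five calibrations gives the five axis values that a game engine could then read like any other joystick. Clamping keeps a finger that presses harder than it did during calibration from pushing an axis out of range.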

[Leslie] and her colleagues have a way to go on this; testers complained that the flat hand posture was unnatural, and that the foam heated things up quickly. Maybe something more along the lines of these gesture-capturing gloves would work?

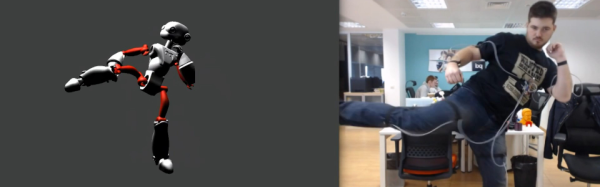

Fifteen sensor boards, called K-Ceptors, are attached to various points on the body, each containing an LSM9DS1 IMU (Inertial Measurement Unit). The K-Ceptors are wired together while still allowing plenty of freedom to move around. Communication is via I2C to a Raspberry Pi. The Pi then sends the collected data over WiFi to a desktop machine. As you move around, a 3D model of a human figure follows in real time, displayed on the desktop’s screen using Blender, the popular, free 3D modeling package. Of course, you can do something else with the data if you want, perhaps make a robot move. Check out the overview and the performance by a clearly experienced dancer putting the system through its paces in the video below.
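
The Pi-to-desktop hop is the kind of thing that is easy to picture in code. The sketch below is not the project's actual protocol. It assumes that some sensor-fusion step has already turned each IMU's raw readings into an orientation quaternion, and that one frame for all fifteen boards is packed into a single binary payload that could be sent as a datagram. The frame layout and the names `pack_frame` and `unpack_frame` are hypothetical.

```python
import struct
from typing import List, Sequence, Tuple

NUM_SENSORS = 15  # fifteen K-Ceptors, as described above

# Hypothetical frame layout (an assumption, not the project's format):
# little-endian uint32 frame counter, then one (w, x, y, z) float32 quaternion per sensor.
FRAME_FORMAT = "<I" + "4f" * NUM_SENSORS
FRAME_SIZE = struct.calcsize(FRAME_FORMAT)

Quaternion = Tuple[float, float, float, float]


def pack_frame(frame_id: int, quaternions: Sequence[Quaternion]) -> bytes:
    """Serialize one orientation per sensor into a fixed-size binary frame."""
    if len(quaternions) != NUM_SENSORS:
        raise ValueError(f"expected {NUM_SENSORS} quaternions, got {len(quaternions)}")
    flat = [component for q in quaternions for component in q]
    return struct.pack(FRAME_FORMAT, frame_id, *flat)


def unpack_frame(payload: bytes) -> Tuple[int, List[Quaternion]]:
    """Inverse of pack_frame, as the desktop side might run it."""
    values = struct.unpack(FRAME_FORMAT, payload)
    frame_id, flat = values[0], values[1:]
    quaternions = [tuple(flat[i:i + 4]) for i in range(0, len(flat), 4)]
    return frame_id, quaternions
```

On the Pi, the bytes from `pack_frame` could be handed to a UDP socket's `sendto`, and the desktop would pass each received datagram to `unpack_frame` before posing the model. A fixed-size frame with a counter makes dropped or reordered packets easy to spot, which matters more than guaranteed delivery when the figure has to keep up with a dancer.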

In fairness, what [Mark Rober] started three years ago seemed like a pretty simple task. He wanted to build a rig to move a dartboard’s bullseye to meet the predicted impact point of any throw. It sounds straightforward, but it turns out to be rather difficult, especially when you choose to roll your own motion capture system.
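
To see why the prediction step sounds simple on paper, here is a sketch of the textbook version. It is not [Mark Rober]'s method. It assumes the motion capture system has already produced a position and velocity for the dart, ignores air drag, and treats the board as a vertical plane at a known distance. The coordinate convention (x toward the board, y sideways, z up) and the function name `predict_impact` are hypothetical.

```python
from typing import Tuple

GRAVITY = 9.81  # m/s^2, acting along -z

Vector3 = Tuple[float, float, float]


def predict_impact(position: Vector3, velocity: Vector3,
                   board_x: float) -> Tuple[float, float, float]:
    """Predict where a drag-free projectile crosses the plane x = board_x.

    Returns (time_to_impact, y_at_impact, z_at_impact) in seconds and meters.
    """
    px, py, pz = position
    vx, vy, vz = velocity
    if vx == 0:
        raise ValueError("dart has no velocity toward the board")
    t = (board_x - px) / vx  # constant horizontal speed without drag
    if t < 0:
        raise ValueError("dart is moving away from the board")
    y = py + vy * t                          # sideways drift
    z = pz + vz * t - 0.5 * GRAVITY * t * t  # ballistic drop
    return t, y, z
```

The math is the easy part. A dart released two meters from the board at a few meters per second arrives in well under half a second, so the position and velocity estimates have to come in fast and clean enough for a mechanism to react, and that is where a home-built capture system gets hard.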
