Modern retrocomputing tricks often push old hardware and systems further than any of the back-in-the-day developers could have dreamed. How about a neural network on an original Mac? [KenDesigns] does just this with a classic handwritten digit identification network, built on an entirely custom SDK!

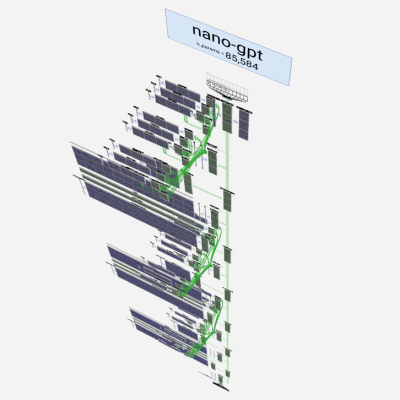

Getting such a piece of hardware to run what is effectively multiple decades of machine learning progress is as hard as you might imagine. (The MNIST dataset used wasn’t even put together until the 90s.) The original Mac’s floating-point limitations cause a variety of issues when running machine learning models. One of the several hoops to jump through was quantizing the model, which also allows it to be squeezed into the Mac’s limited RAM.
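For a rough idea of what quantization buys you, here is a minimal Python sketch of symmetric 8-bit quantization. It is not [KenDesigns]' actual code: the function names, the 8-bit width, and the sample weights and inputs are all assumptions made up for illustration. The point is that once weights and inputs are small integers, the inner loop of a layer needs only integer multiplies and adds, and each value fits in a single byte.

```python
# Illustrative only: names, bit width, and sample values are assumptions,
# not taken from the project described above.

def quantize(values, bits=8):
    """Symmetric linear quantization: floats -> signed ints plus one shared scale."""
    qmax = 2 ** (bits - 1) - 1              # 127 for 8 bits
    peak = max(abs(v) for v in values)
    scale = peak / qmax if peak else 1.0    # avoid dividing by zero for all-zero input
    return [round(v / scale) for v in values], scale


def int_dot(q_weights, q_inputs):
    """Integer-only dot product: no floating-point math inside the loop."""
    return sum(w * x for w, x in zip(q_weights, q_inputs))


weights = [0.42, -1.27, 0.05, 0.88]   # hypothetical weights for one neuron
inputs = [1.0, 0.6, 0.0, 0.25]        # hypothetical pixel intensities

q_w, w_scale = quantize(weights)
q_x, x_scale = quantize(inputs)

# The two scales are applied once, after the integer accumulation.
approx = int_dot(q_w, q_x) * w_scale * x_scale
exact = sum(w * x for w, x in zip(weights, inputs))

print("quantized weights:", q_w)
print("exact:", exact, "approx:", approx)
```

The quantized result lands close to the floating-point one, at the cost of a small rounding error. A real implementation would also have to handle biases, activations, and accumulator overflow, which this sketch ignores.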

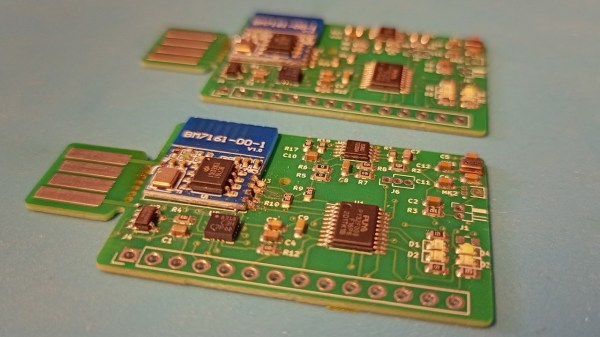

Impressively, one of the most important features of [KenDesigns]' setup is the custom SDK, which makes it possible to run without macOS. This allows for incredibly nitty-gritty adjustments, but also requires an entirely custom installation. It's not all for nothing, though: after some training manipulation, the model runs with clear proficiency.

If you want to see it go, check out the video embedded below. Or if you just want to run it on your ancient Mac, you’ll find a disk image here. It has even been tested in emulators for those without the original hardware. Newer hardware traditionally proves to be easier and more compact to use than these older toys; however, that doesn’t make it any less impressive to run a neural network on a calculator!


![An image of a light grey graphing calculator with a dark grey screen and key surround. The text on the monochrome LCD screen shows "Input: ENEB Result 1: BEEN Confidence 1: 14% [##] Result 2: Good Confidence 2: 12% [#] Press ENTER key..."](https://hackaday.com/wp-content/uploads/2025/07/Hermes-Optimus-TI-84-Plus-Silver-Edition-Neural-Network-YouTube-0-0-52.jpeg?w=600&h=450)