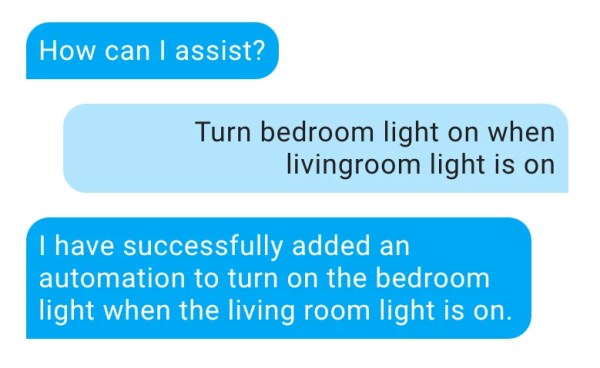

‘Hearing voices’ doesn’t have to be worrisome, for instance when software-defined radio (SDR) happens to be your hobby. Pulling voices from the ether and decoding them can take quite a bit of your time and attention. So [theckid] came up with a nifty solution: RadioTranscriptor. It’s a homebrew Python script that captures SDR audio and transcribes it using OpenAI’s Whisper model, running on your GPU if available. It’s lean and geeky, and helps you hear ‘the voice in the noise’ without actively listening to it yourself.

This tool goes beyond basic listening and recording. RadioTranscriptor combines SDR, voice activity detection (VAD), and deep learning. It resamples 48 kHz audio to 16 kHz in real time. It keeps a rolling buffer, and transcribes only when it detects actual speech on the air. It continuously writes to a daily log, so you can comb through yesterday’s signal hauntings while new findings are being logged. It supports GPU acceleration through CUDA, and falls back to the CPU when no GPU is available.
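To give a feel for how those pieces fit together, here is a minimal sketch in plain Python. It is not [theckid]’s code: the names `downsample`, `VoiceGate`, and `daily_log_name`, the energy threshold, and the log file naming are all assumptions made up for illustration, and a simple loudness check stands in for whatever VAD the script really uses. The Whisper call itself is left out; the segment returned by `feed` is what you would hand to the model.

```python
from collections import deque
from datetime import date
import math

SOURCE_RATE = 48_000                   # rate the SDR audio arrives at
TARGET_RATE = 16_000                   # rate the speech model expects
FACTOR = SOURCE_RATE // TARGET_RATE    # 3 input samples per output sample


def downsample(samples):
    """Crude 48 kHz -> 16 kHz conversion: average each group of 3 samples.

    A real resampler would use a proper low-pass filter; this is a stand-in.
    """
    usable = len(samples) - len(samples) % FACTOR
    return [sum(samples[i:i + FACTOR]) / FACTOR
            for i in range(0, usable, FACTOR)]


def rms(frame):
    """Root-mean-square level of a frame (0.0 for an empty frame)."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


class VoiceGate:
    """Hypothetical energy-based gate with a rolling buffer of loud frames."""

    def __init__(self, threshold=0.02, max_frames=50, hangover=3):
        self.threshold = threshold              # assumed RMS level for "voice"
        self.buffer = deque(maxlen=max_frames)  # oldest frames drop off first
        self.hangover = hangover                # quiet frames that end a segment
        self.quiet = 0

    def feed(self, frame_48k):
        """Take one 48 kHz frame; return a finished 16 kHz segment or None."""
        frame = downsample(frame_48k)
        if rms(frame) >= self.threshold:
            self.buffer.append(frame)
            self.quiet = 0
            return None
        if not self.buffer:
            return None                         # silence with nothing buffered
        self.quiet += 1
        if self.quiet < self.hangover:
            return None                         # wait a little longer
        segment = [s for f in self.buffer for s in f]
        self.buffer.clear()
        self.quiet = 0
        return segment                          # ready to be transcribed


def daily_log_name(day=None):
    """Assumed naming scheme: one log file per calendar day."""
    day = day or date.today()
    return f"transcript_{day.isoformat()}.log"
```

Feed it frames as they arrive from the sound device, and whenever `feed` returns a list, transcribe it and append the text to the file named by `daily_log_name()`. Raising `threshold` makes the gate less likely to trigger on static, at the cost of missing weak signals.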

It sure has its quirks, too, such as ghost logs and duplicate words, but it’s dead useful and hackable to your liking. Want to change the model, tweak the threshold, or add speaker detection? The code is here to fork and extend. And why not go the extra mile, and turn it into art?