When interacting with reality at a distance is the best course of action, we turn to robots. Whether that’s exploring the surface of Venus, the depths of the ocean, or (for the time being) society at large, it’s often better to put a robot out there than an actual human being. We can’t all send robots to other planets, but telepresence robots make it easy to put one in plenty of places closer to home.

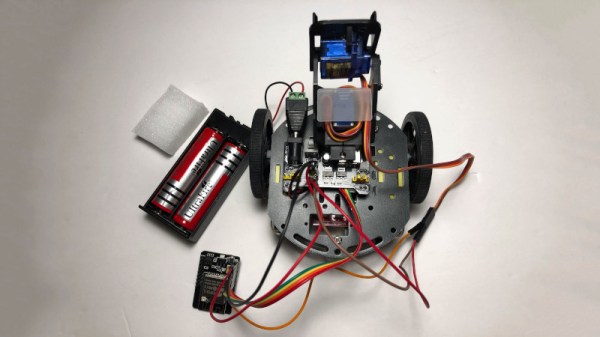

This tiny telepresence robot comes to us from [Ross] at [Crafty Robot], who is using their small Smartibot platform as its basis. The Smartibot drives an easily-created cardboard chassis, complete with wheels, and trucks around a smartphone of some sort which handles the video and network capabilities. The robot can be viewed and controlled from any other computer using a suite of web applications that can be found on the project page.

The Smartibot is an inexpensive platform that we’ve seen do other things, like drive an airship. Its creators are hoping that as many people as possible can get some use out of this quick-and-easy telepresence robot if they really need something like this right now. The kit seems like it would be useful for a lot of other fun projects as well.