The humble mouse has been an essential part of the desktop computing experience ever since the original Apple Macintosh popularized it in 1984. While mice enabled user-friendly GUIs, thus making computers accessible to more people than ever, they also caused a significant increase in repetitive strain injuries (RSI). Mainly caused by poor posture and stress, RSI can lead to pain, numbness and tingling sensations in the hand and arm, which the user might only notice when it’s too late.

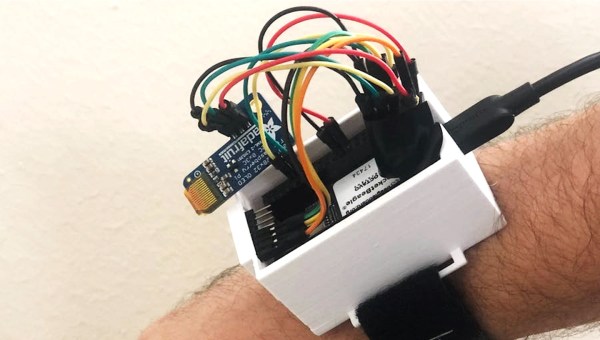

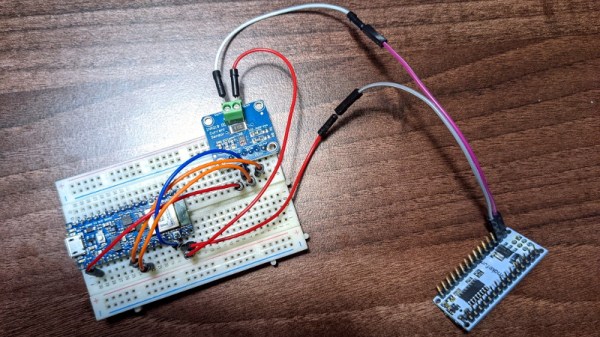

Hoping to catch signs of RSI before it manifests itself, [kutluhan_aktar] built a device that allows him to track mouse fatigue. It does so through two sensors: one that measures galvanic skin response (GSR) and another that performs electromyography (EMG). Together, these two measurements should give an indication of the amount of muscle soreness. The sensor readout circuits are connected to a Wio Terminal, a small ARM Cortex-M4 development board with a 2.4″ LCD.

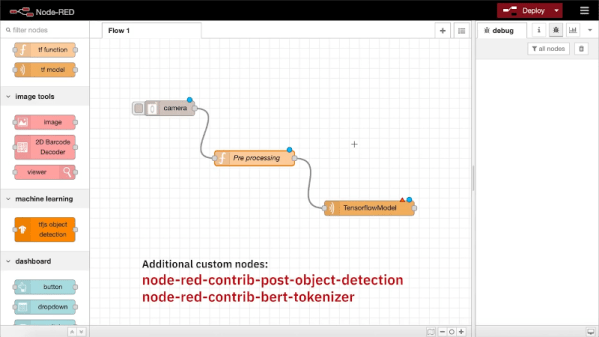

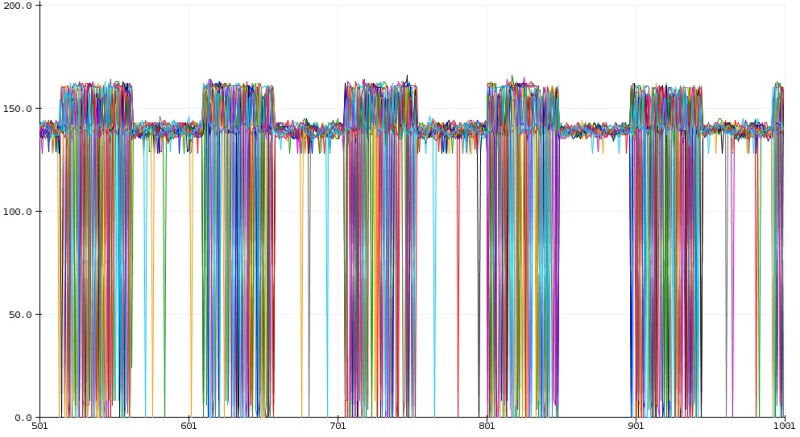

However, calculating muscle soreness is not as simple as adding a few numbers together. In fact, the link between the sensor data and the muscles’ state of health is complicated enough that [kutluhan] decided to train a TensorFlow artificial neural network (ANN), taking into account observed stress levels collected in real life. The network ran on the Wio while he used the mouse, pressing buttons to indicate the amount of stress he experienced. After a few rounds of training, he ended up with a network that reached an accuracy of more than 80%.
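To get a feel for the idea of mapping two sensor readings onto a self-reported stress level, here is a minimal sketch in plain Python. It is not [kutluhan]'s TensorFlow model: it swaps in a single-layer softmax classifier trained by gradient descent, and everything in it is an assumption made for illustration, including the three class names, the 0-to-1 scaling of the GSR and EMG values, and the synthetic data that stands in for real recordings.

```python
import math
import random

# Hypothetical labels: the article only says that button presses
# indicated the amount of stress, not what the levels were called.
CLASSES = ["relaxed", "tense", "stressed"]


def make_synthetic_samples(n_per_class, rng):
    """Fabricate (gsr, emg) pairs scaled to roughly 0..1. NOT real sensor data."""
    centers = [(0.1, 0.1), (0.5, 0.5), (0.9, 0.9)]  # assumed cluster centers
    samples = []
    for label, (gsr_c, emg_c) in enumerate(centers):
        for _ in range(n_per_class):
            features = (gsr_c + rng.gauss(0, 0.05), emg_c + rng.gauss(0, 0.05))
            samples.append((features, label))
    rng.shuffle(samples)
    return samples


def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_proba(weights, features):
    """Class probabilities for one (gsr, emg) reading."""
    x = (*features, 1.0)  # constant 1.0 acts as the bias input
    return softmax([sum(w * xi for w, xi in zip(row, x)) for row in weights])


def predict(weights, features):
    """Index of the most probable class."""
    probs = predict_proba(weights, features)
    return probs.index(max(probs))


def train(samples, epochs=300, lr=0.5):
    """Fit one weight row per class with per-sample gradient descent."""
    weights = [[0.0, 0.0, 0.0] for _ in CLASSES]
    for _ in range(epochs):
        for features, label in samples:
            probs = predict_proba(weights, features)
            x = (*features, 1.0)
            for k, row in enumerate(weights):
                # Gradient of the cross-entropy loss w.r.t. the class score
                error = probs[k] - (1.0 if k == label else 0.0)
                for j in range(len(row)):
                    row[j] -= lr * error * x[j]
    return weights


def accuracy(weights, samples):
    """Fraction of samples whose predicted class matches the label."""
    hits = sum(predict(weights, f) == y for f, y in samples)
    return hits / len(samples)


if __name__ == "__main__":
    rng = random.Random(0)
    train_set = make_synthetic_samples(40, rng)
    test_set = make_synthetic_samples(20, rng)
    model = train(train_set)
    print("held-out accuracy:", accuracy(model, test_set))
    print("reading (0.5, 0.5) ->", CLASSES[predict(model, (0.5, 0.5))])
```

Real GSR and EMG signals are far noisier than these tidy clusters, which is presumably why a proper multi-layer network was worth the effort in the actual build.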

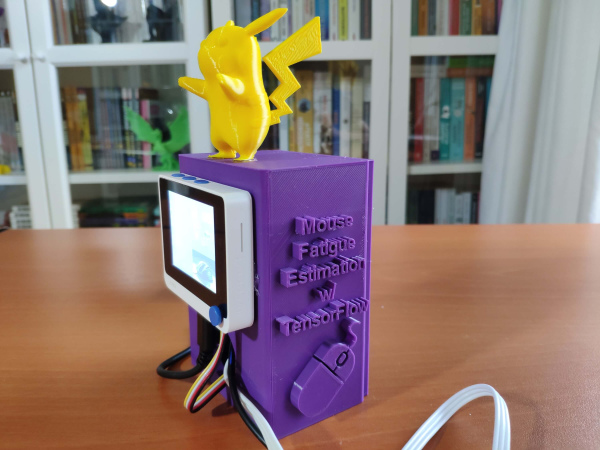

[kutluhan] also designed a rather neat 3D-printed enclosure to house the sensor readout boards as well as a battery to power the Wio Terminal. Naturally, the case is graced by a 3D rendition of Pikachu on top (get it? a mouse Pokémon that can paralyze its opponents!). We’ve seen [kutluhan]’s fondness for Pokémon-themed projects in his earlier Jigglypuff CO₂ sensor.

Although the setup with multiple sensors doesn’t seem too practical for everyday use, the Mouse Fatigue Estimator might be a useful tool to train yourself to keep good posture and avoid stress while using a mouse. If you also use a keyboard (and who doesn’t?), make sure you’re using that correctly as well.
