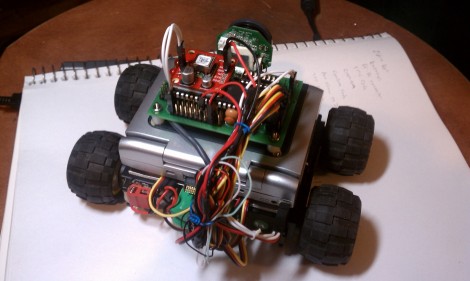

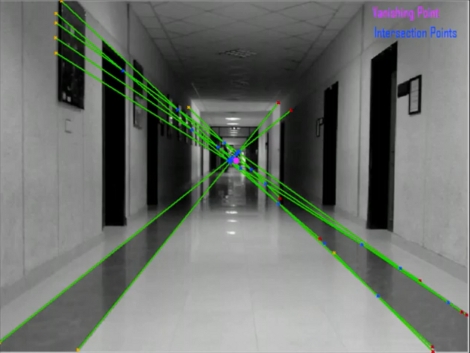

Students at the National University of Computer and Emerging Sciences in Pakistan have been working on a robot to assist the visually impaired. It looks pretty simple: just a mobile base that carries a laptop and a webcam. The bot doesn’t have a map of its environment; instead, it uses vanishing point guidance. As you can see in the image above, each captured frame is analyzed for indicators of perspective, which are extrapolated all the way to the vanishing point where the green lines intersect. Here it’s using stripes on the floor, as well as the corners where the walls meet the ceiling, to establish these lines. From the video after the break you can see that this method works, and perhaps with a little bit of averaging they could get the bot to drive straight with less zig-zagging.
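To make the idea concrete, here is a rough Python sketch of the two pieces described above: finding the point where the extrapolated lines meet, and averaging the result so the steering doesn't twitch from frame to frame. This is not the students' code. The line segments are assumed to come from some line detector (the post doesn't say which), and the names `vanishing_point`, `steering_error`, and `SmoothedValue`, along with the `alpha` parameter, are made up for illustration.

```python
from typing import List, Optional, Tuple

# A detected line segment as (x1, y1, x2, y2) in pixel coordinates (assumed format).
Segment = Tuple[float, float, float, float]


def vanishing_point(segments: List[Segment]) -> Tuple[float, float]:
    """Least-squares intersection of the infinite lines through the segments."""
    # Write each line as a*x + b*y = c, with (a, b) a unit normal, then
    # minimise the summed squared distance from (x, y) to every line.
    saa = sab = sbb = sac = sbc = 0.0
    for x1, y1, x2, y2 in segments:
        a, b = y2 - y1, x1 - x2
        norm = (a * a + b * b) ** 0.5
        if norm == 0.0:
            continue  # zero-length segment carries no direction
        a, b = a / norm, b / norm
        c = a * x1 + b * y1
        saa += a * a
        sab += a * b
        sbb += b * b
        sac += a * c
        sbc += b * c
    det = saa * sbb - sab * sab
    if abs(det) < 1e-9:
        raise ValueError("lines are parallel or too few to intersect")
    # Solve the 2x2 normal equations with Cramer's rule.
    x = (sac * sbb - sab * sbc) / det
    y = (saa * sbc - sab * sac) / det
    return x, y


def steering_error(vp_x: float, image_width: float) -> float:
    """Horizontal offset of the vanishing point from image centre, in [-1, 1]."""
    half = image_width / 2.0
    return (vp_x - half) / half


class SmoothedValue:
    """Exponential moving average, one simple way to do the 'averaging'."""

    def __init__(self, alpha: float = 0.2) -> None:
        self.alpha = alpha  # hypothetical tuning knob: lower = smoother, slower
        self.value: Optional[float] = None

    def update(self, sample: float) -> float:
        if self.value is None:
            self.value = sample
        else:
            self.value += self.alpha * (sample - self.value)
        return self.value
```

In a control loop you would feed each frame's `steering_error` through `SmoothedValue.update` and steer on the smoothed number. An exponential moving average is just one choice here; a median over the last few frames would be another way to reject the occasional bad line detection.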

Similar work on vanishing point navigation is being done at the University of Minnesota. [Pratap R. Tokekar’s] robot can also be seen after the break, zipping along the corridor and even making turns when it runs out of hallway.