Losing one’s sense of hearing or vision is likely to be devastating in how it impacts daily life. Fortunately many workarounds exist that use one’s remaining senses, such as sign language, but what if you are not only deaf but also blind? Here, too, a workaround exists in the form of tactile signing, which is akin to visual sign language except that it relies on the sense of touch. This generally requires someone who knows tactile sign language to translate from spoken or written forms to tactile signing. Yet what if you’re deaf-blind and without human assistance? This is where a new robotic system could conceivably fill in.

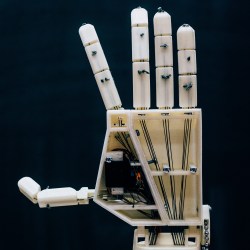

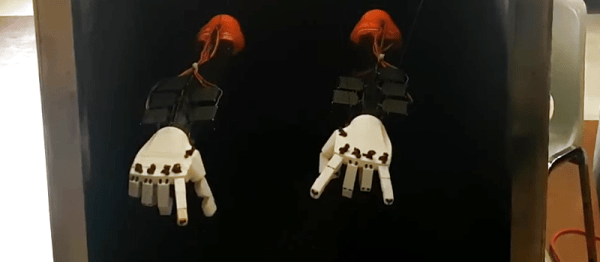

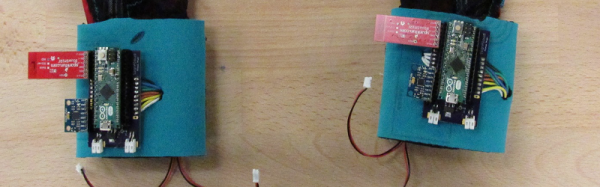

Developed by Tatum Robotics, the Tatum T1 is a robotic hand with associated software that’s intended to provide this translation function. It takes in natural language, whether spoken, written or in some digital format, and uses a number of translation steps to produce tactile sign language as output, whether in ASL, the BANZSL alphabet or another system. These tactile signs are then expressed using the robotic hand, and a connected arm as needed, ideally using ASL gloss to convey as much information as quickly as possible, not unlike with visual ASL.

This also answers the question of why one would not just use a simple braille cell on a hand: signing speed is essential to keep up with real-time communication, unlike when, say, reading a book or email. A robotic companion like this could provide deaf-blind individuals with a critical bridge to the world around them. The Tatum T1 is currently still in the testing phase, but hopefully before long it will be another tool for the tens of thousands of deaf-blind people in the US today.

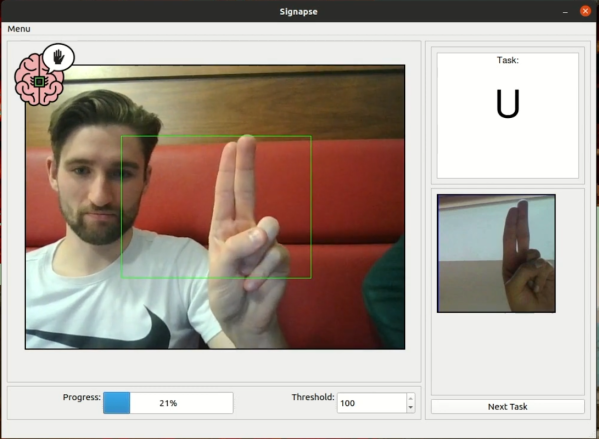

