The old adage that you’ll make a fortune by developing a better mouse trap is not super realistic, as the engineers behind Sony’s Betamax video tape standard could tell you. However, you can still learn a lot by building your own, as this project from [ROBO HUB] demonstrates.

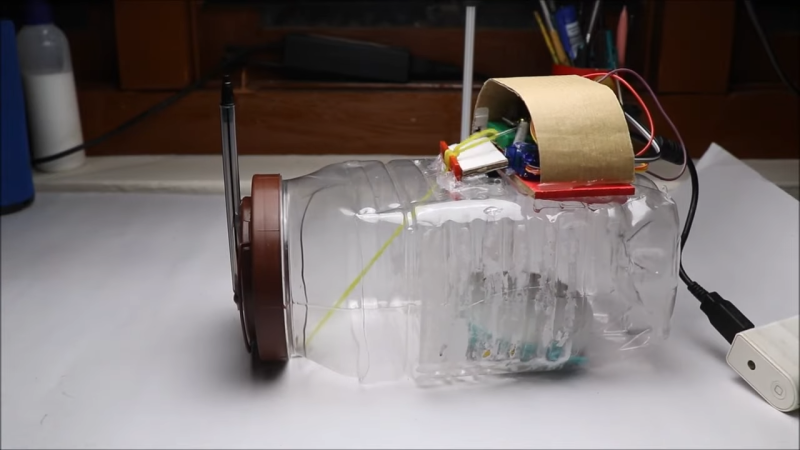

The trap is intended to catch mice humanely, without injuring the animal. To that end, it uses an Arduino Nano armed with an ultrasonic distance sensor to detect when a mouse has entered a plastic container. The container’s hinged door is held open by a servo. When a mouse is detected, the servo releases the door, which snaps shut under the tension of an elastic band.
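The project’s firmware isn’t reproduced here, so what follows is only a minimal sketch of how the detect-and-trip logic might look, written in plain C++ (the language Arduino sketches use) so it can be compiled and tested off the board. It assumes an HC-SR04-style sensor that reports a round-trip echo time in microseconds and a sensor that reads the container’s empty depth until something steps into the beam. The names `echoToCm` and `TrapController`, the margin, and the “consecutive hits” debounce are all hypothetical choices, not [ROBO HUB]’s code.

```cpp
#include <cassert>

// Hypothetical helper: convert an HC-SR04-style echo pulse width
// (round trip, in microseconds) to a one-way distance in centimetres.
// Sound travels roughly 0.0343 cm/us; halve it because the pulse goes out and back.
float echoToCm(unsigned long echoMicros) {
    return echoMicros * 0.0343f / 2.0f;
}

// Hypothetical controller: decides when to trip the door based on distance readings.
class TrapController {
public:
    // emptyDistanceCm: reading with nothing in the container (assumed, measured once)
    // marginCm: how much closer a reading must be to count as "something inside"
    // requiredHits: consecutive positive readings needed before tripping (noise filter)
    TrapController(float emptyDistanceCm, float marginCm, int requiredHits)
        : emptyCm_(emptyDistanceCm), marginCm_(marginCm), requiredHits_(requiredHits) {
        assert(requiredHits > 0);
    }

    // Feed one distance reading. Returns true exactly once: on the reading
    // that should make the servo release the door.
    bool update(float distanceCm) {
        if (triggered_) return false;          // door already shut; ignore the sensor
        if (distanceCm <= 0.0f) {              // a timed-out measurement is commonly reported as 0
            hits_ = 0;
            return false;
        }
        bool somethingInside = distanceCm < emptyCm_ - marginCm_;
        hits_ = somethingInside ? hits_ + 1 : 0;  // any empty reading restarts the count
        if (hits_ >= requiredHits_) {
            triggered_ = true;
            return true;
        }
        return false;
    }

    bool triggered() const { return triggered_; }

private:
    float emptyCm_;
    float marginCm_;
    int requiredHits_;
    int hits_ = 0;
    bool triggered_ = false;
};
```

On the Nano itself, each reading would come from something like Arduino’s `pulseIn(echoPin, HIGH)`, and a `true` from `update()` is where you would call the `Servo` library’s `write()` to swing the arm and let the elastic band close the door. Requiring a few consecutive hits trades a fraction of a second of latency for fewer false trips from a single noisy echo.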

The key to making this design work well is ensuring that there are no gaps in the closed container that the mouse can use to escape. They’re wily creatures able to squeeze through positively tiny spaces, so it’s important to get this right. Besides that, you want to check the trap regularly, lest any caught mice simply claw and chew their way out.

We’ve seen a few mousetraps around these parts before, too. Video after the break.