[Dan Schnur] has a simple strategy to ensure their business card stays on the client’s desk and doesn’t just get lobbed in a drawer: make it into a tiny gaming platform. This entry into the 2024 Business Card Challenge is based around the tinyjoypad project, integrating an SSD1306 OLED display, a joypad, and a push button.

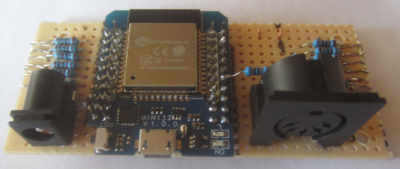

Powered by the superstar ATtiny85, the electronics are really not all that much: just a sprinkling of passives to support the display and the six switch inputs from the joystick and push button. Or at least, that’s as much as we can glean from the PCB images, since the PCB design files are not provided in the project’s GitHub repository.
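Six switches is a lot for a chip this small. The ATtiny85 has only six I/O pins (PB0 to PB5, with PB5 doubling as the reset line), and if the SSD1306 is wired over I2C, as is common for these little modules, two of those go to the display. One common trick for fitting more switches onto fewer pins is a resistor ladder: each switch pulls a shared analog pin to a different voltage, and firmware sorts the ADC reading into windows. We haven't confirmed that this card works that way; the sketch below is purely illustrative, and the threshold windows and the `decode_ladder` name are hypothetical, not taken from the tinyjoypad firmware.

```python
from typing import Optional

# Hypothetical voltage windows for a 10-bit ADC (0-1023).
# Each entry is (low inclusive, high exclusive, button name).
# These values are illustrative assumptions, not the card's real thresholds.
LADDER_WINDOWS = [
    (500, 750, "up"),
    (750, 950, "down"),
]


def decode_ladder(adc_value: int, windows=LADDER_WINDOWS) -> Optional[str]:
    """Return the name of the button whose window contains adc_value,
    or None if the reading falls outside every window (no press)."""
    if not 0 <= adc_value <= 1023:
        raise ValueError("expected a 10-bit ADC reading (0-1023)")
    for low, high, name in windows:
        if low <= adc_value < high:
            return name
    return None


if __name__ == "__main__":
    for reading in (1023, 820, 610, 100):
        print(reading, decode_ladder(reading))
```

Keeping the windows in a table rather than a chain of `if` statements makes it easy to retune the thresholds once the actual resistor values are known.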

Leaving the heavy lifting of the software to the tinyjoypad project lets the designer concentrate on the actual job at hand, which is the reason the business card exists: keeping its owner at the forefront of the client’s mind. In the meantime, the card can be a useful distraction for those idle moments. A few such distractions include a tiny version of Missile Command (as shown above), Tiny Tris, and a very cut-down Q*bert. Sadly, that last game isn’t quite the same without that distinctive sound.

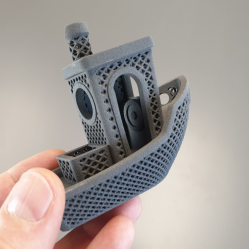

settled upon a simple linear arrangement of beams held within a laser-cut wooden box frame. Since these laser modules are quite small, some aluminium rod was machined to make some simple housings to push them into, making them easier to mount in the frame and keeping them nicely aligned with their corresponding LDR.

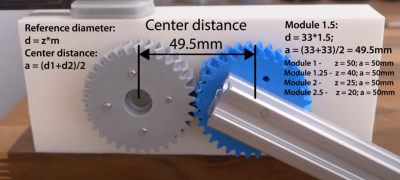

durability and increase noise. On the other hand, helical gears have a more gradual engagement, resulting in reduced noise and smoother operation. This leads to an increased load-carrying capacity, thus improving durability and lifespan.
