[3DPrintedLife aka Andrew DeGonge] saw that advert for Gatorade that shows some slick stop-motion animation using a so-called ‘liquid printer’ and wondered how they built the machine and got it to work so well. The answer, it would seem, involves a lot of hard work and experimentation.

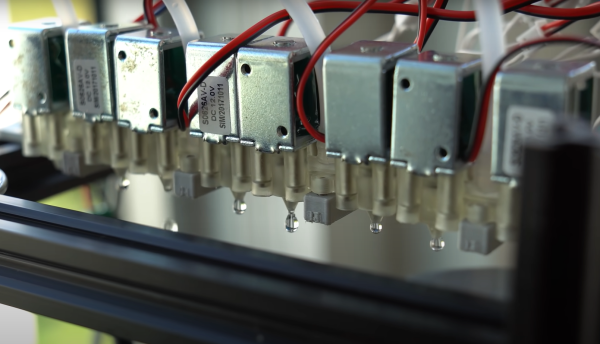

Conceptually it’s not hard to grasp. A water reservoir sits at the top, which gravity-feeds into a series of electromechanical valves below, which in turn feed into nozzles. From there, the valve timing and the water pressure dictate the droplet size. The droplets fall under the influence of gravity, to be collected at the bottom. From that point it’s a ‘simple’ matter of timing droplets with respect to a lighting strobe or camera shutter and hey-presto! Instant animation.
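To get a feel for the timing involved, here is a minimal sketch of the arithmetic, not [Andrew]'s actual code. It assumes each drop leaves the nozzle from rest and ignores air drag, so a drop takes `sqrt(2h/g)` to fall a distance `h`. The function names `fall_time` and `release_times` are made up for illustration.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def fall_time(distance_m: float) -> float:
    """Seconds for a drop released from rest to fall distance_m (no drag)."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return math.sqrt(2.0 * distance_m / G)


def release_times(flash_time_s: float, depths_m: list[float]) -> list[float]:
    """When to open the valve so each drop sits at its depth when the strobe fires.

    depths_m holds the desired distance below the nozzle for each drop
    at the moment of the flash, which happens at flash_time_s.
    """
    return [flash_time_s - fall_time(d) for d in depths_m]


if __name__ == "__main__":
    # Three drops that should hang 10 cm, 30 cm and 50 cm below the nozzle
    # when the strobe fires one second into the sequence.
    for depth, t in zip([0.1, 0.3, 0.5], release_times(1.0, [0.1, 0.3, 0.5])):
        print(f"depth {depth:.2f} m -> open valve at t = {t:.3f} s")
```

A real build would also have to account for valve opening delay and the drop's initial velocity, which is presumably where much of the experimentation goes.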

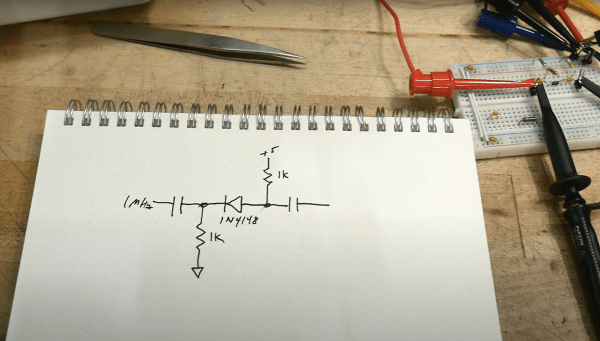

As will become evident from the video, it’s just not as easy as that. After an initial wobble, when [Andrew] realised that cheap “air-only” solenoids really are for air only once they rusted up, he took a slight detour to design and 3D print his own valve body. Using a resin printer to produce finely detailed prints enabled the production of small internal passages, including an ‘air spring’, which is just a small chamber of air. After a lot of testing, this proved to be a step in the right direction. Whether this could have been achieved with an FDM printer is open to speculation, but we suspect the superior fine detail capabilities of modern resin printers are a big help here.

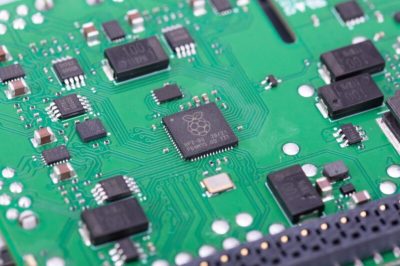

In a nice twist, [Andrew] ripped open a fluorescent marker pen, dissolved the ink, and used that in place of plain water. When illuminated with suitably triggered UV LED strips, discernible animation was achieved, with an eerie green glow which we think looks pretty neat. All he needs to do now is upgrade the hardware to make a 3D array with more resolution, and he can start approaching the capability of the thing that inspired him. Work on some custom electronics to drive it has started, so this is one to watch in the coming months!

We’ve seen many water-based display devices before, like this one that projects directly onto a thin stream of water, and this strangely satisfying hack using paraffin and water, but a full 3D Open Source display device seems elusive so far.

All project details can be found on the associated GitHub.
