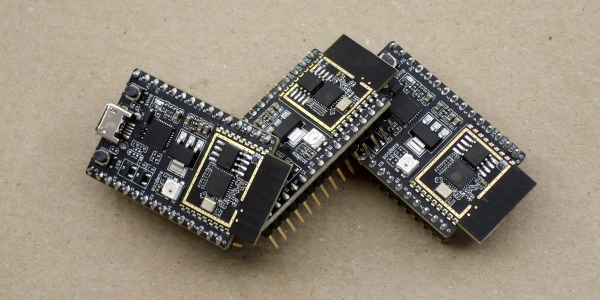

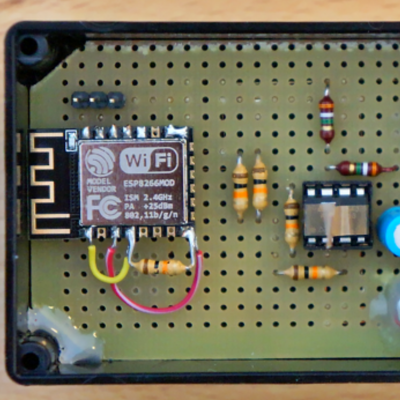

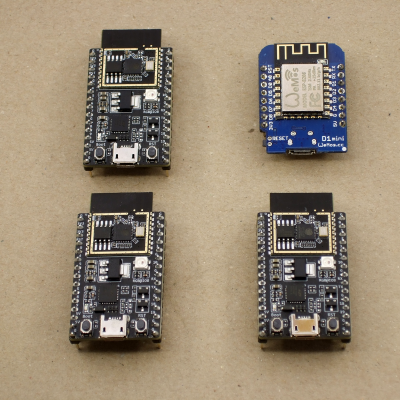

We just got our hands on some engineering pre-samples of the ESP32-C3 chip and modules, and there’s a lot to like about this chip. The question is what should you compare this to; is it more an ESP32 or an ESP8266? The new “C3” variant has a single 160 MHz RISC-V core that out-performs the ESP8266, and at the same time includes most of the peripheral set of an ESP32. While RAM often ends up scarce on an ESP8266 with around 40 kB or so, the ESP32-C3 sports 400 kB of RAM, and manages to keep it all running while burning less power. Like the ESP32, it has Bluetooth LE 5.0 in addition to WiFi.

Espressif’s website says multiple times that it’s going to be “cost-effective”, which is secret code for cheap. Rumors are that there will be eight-pin ESP-O1 modules hitting the streets priced as low as $1. We usually require more pins, but if medium-sized ESP32-C3 modules are priced near the ESP8266-12-style modules, we can’t see any reason to buy the latter; for us it will literally be an ESP8266 killer.

On the other hand, it lacks the dual cores of the ESP32, and simply doesn’t have as many GPIO pins. If you’re a die-hard ESP32 abuser, you’ll doubtless find some features missing, like the ultra-low-power coprocessor or the DACs. But it does share a lot of the ESP32 standouts: the LEDC (PWM) peripheral and the unique parallel I2S come to mind. Moreover, it shares the ESP-IDF framework with the ESP32, so despite running on an entirely different CPU architecture, a lot of code will run without change on both chips just by tweaking the build environment with a one-liner.

If you were confused by the chip’s name, like we were, a week or so playing with the new chip will make it all clear. The ESP32-C3 is a lot more like a reduced version of the ESP32 than it is like an improvement over the ESP8266, even though it’s probably destined to play the latter role in our projects. If you count in the new ESP32-S3 that brings in USB, the ESP32 family is bigger than just one chip. Although it does seem odd to lump the RISC-V and Tensilica CPUs together, at the end of the day it’s the peripherals more than the CPUs that differentiate microcontrollers, and on that front the C3 is firmly in the ESP32 family.

Our takeaway: the ESP32-C3 is going to replace the ESP8266 in our projects, but it won’t replace the ESP32 which simply has more of everything when we need it. The shared codebase and peripheral architecture makes it easier to switch between the two when we don’t need the full-blown ESP32. In that spirit, we welcome the newcomer to the family.

But naturally, we’ve got a lot more to say about it. Specifically, we were interested in exactly what the RISC-V core brought to the table, and ran the module through power and speed comparisons with the ESP32 and ESP8266 — and it beats them both by a small margin in our benchmarks. We’ve also become a lot closer friends with the ESP-IDF SDK that all of the ESP32 family chips use, and love how far it has come in the last year or so. It’s not as newbie-friendly as ESP-Arduino, for sure, but it’s a ton more powerful, and we’re totally happy to leave the ESP8266 SDK behind us.

Continue reading “Hands-On: The RISC-V ESP32-C3 Will Be Your New ESP8266”