Ever wonder what your favorite board game sounds like? Neither did we. Thankfully [Sara Adkins] did, and created a step sequencer called Let’s Go that uses the classic board game Go as input.

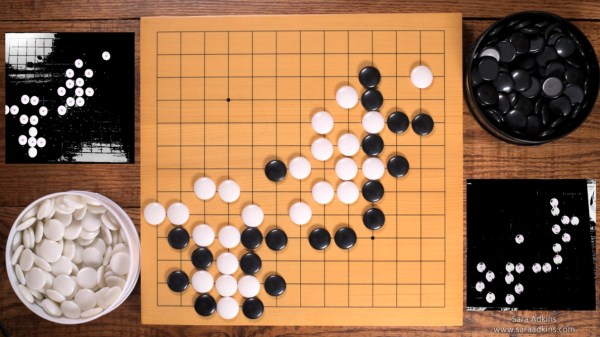

In the game Go, two players place black and white tokens on a grid, vying for control of the board. As the game progresses, the configuration of game pieces gets more complex and coincidentally begins to resemble Conway’s Game of Life (or a weird QR Code). Sara saw music in the evolving arrangement of circles and transformed the ancient board game into a modern instrument so others could hear it too.

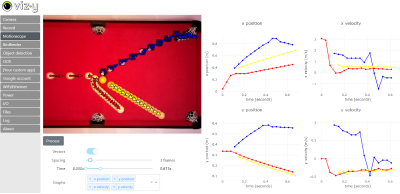

To an observer, [Sara’s] adaptation looks nearly indistinguishable from the version played in China 2,500 years ago, with the exception of an overhead webcam and nearby laptop, of course. The laptop uses OpenCV to digitize the board layout, then feeds that information via Open Sound Control (OSC) into the popular music creation software Max/MSP (though an open-source version could probably be implemented in Pure Data), where it controls a step sequencer. Each row on the board represents an instrumental voice (melodic for white pieces, percussive for black ones), and each column corresponds to a beat.
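To make the row-and-column mapping concrete, here is a minimal Python sketch of how a digitized board might be turned into sequencer steps. This is not [Sara]'s code: the board encoding (`W`, `B`, and `.` characters), the function name `board_to_steps`, and the event format are all assumptions made for illustration, and the OpenCV capture and OSC transport are left out entirely.

```python
from typing import List, Tuple

# Hypothetical board encoding: one string per row, one character per point.
EMPTY, BLACK, WHITE = ".", "B", "W"


def board_to_steps(board: List[str]) -> List[List[Tuple[int, str]]]:
    """For each beat (column), list the (row, kind) events to trigger.

    Rows act as instrumental voices; white stones are treated as melodic
    and black stones as percussive, following the description above.
    """
    if not board:
        return []
    n_cols = len(board[0])
    steps: List[List[Tuple[int, str]]] = [[] for _ in range(n_cols)]
    for row, line in enumerate(board):
        # Ignore anything past the first row's width so ragged input is safe.
        for col, stone in enumerate(line[:n_cols]):
            if stone == WHITE:
                steps[col].append((row, "melodic"))
            elif stone == BLACK:
                steps[col].append((row, "percussive"))
    return steps


# A tiny 3x4 example position (a real Go board is 19x19).
board = [
    "W..B",
    ".W..",
    "B..W",
]
steps = board_to_steps(board)
for beat, events in enumerate(steps):
    print(beat, events)
```

Each inner list is one beat of the sequence, so a sequencer would walk through `steps` in a loop and trigger whatever voices appear at the current beat. An empty list is simply a rest.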

Every new game is a new piece of music that starts out simple and gradually increases in complexity. The music evolves with the board, and adds a new dimension for players to interact with the game. If you want to try it out yourself, [Sara] has the project fully documented on her website, and all of the code is available on GitHub. Now we’re just left wondering what other games sound like — [tinkartank] already answered that question for chess, but what about Settlers of Catan?


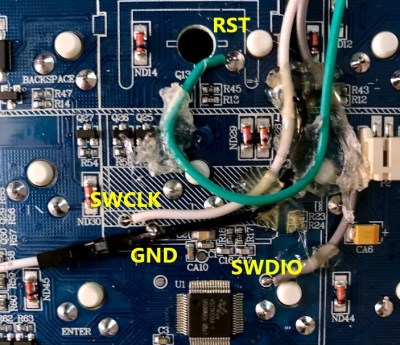

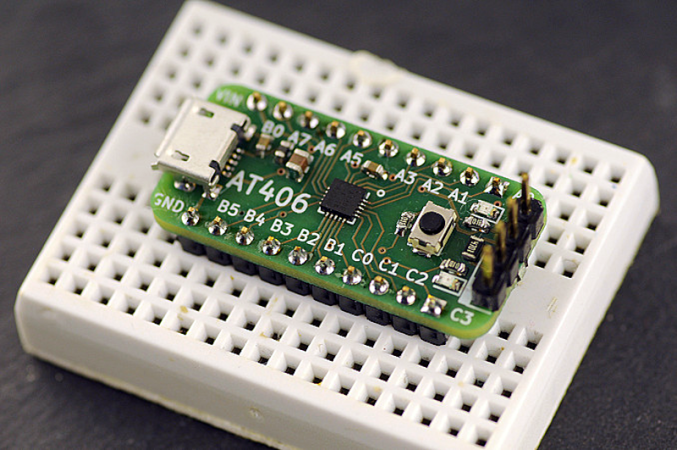

That is the point of [Jake Ammons’] attention-getting lighthouse, designed and built in two weeks’ time for Architectural Robotics class. It detects ambient noise and responds by focusing light in the direction of the sound and changing the light’s color to indicate different events. Up inside the lighthouse is a Teensy 4.0 that reads in the sound and spins a motor in response.
