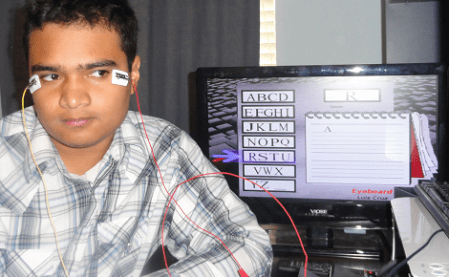

The applications of eye-tracking devices are endless, which is why we always get excited to see new techniques for measuring the absolute position of the human eye. Cornell students [Michael and John] took an interesting approach for their final project and designed a phototransistor-based eye-tracking system.

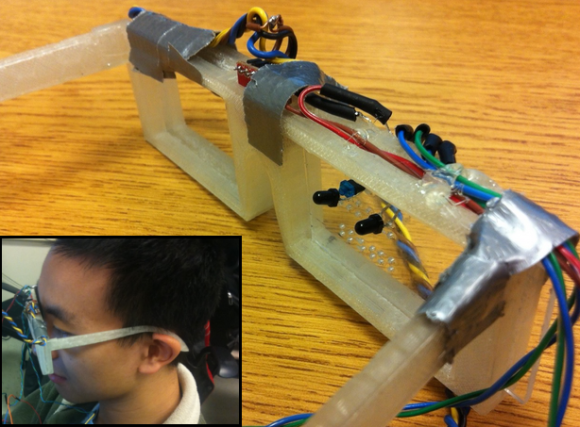

We can definitely see the potential of this project, but for their first prototype, the system relies on both eye tracking and head movement to fully control a mouse pointer. An end-product design was in mind, so the system consists of a pair of custom 3D-printed glasses and a wireless receiver, which avoids the need to be tethered to the computer under control.

The horizontal position of the mouse pointer is controlled via the infrared eye-tracking mechanism, which consists of an infrared LED positioned above the eye and two phototransistors positioned on either side of the eye. The measured analog data from the phototransistors determine the eye’s horizontal position. The vertical movement of the mouse pointer is controlled with the help of a 3-axis gyroscope mounted to the glasses.

The effectiveness of a simple infrared LED/phototransistor arrangement at detecting eye movement is impressive, because similar projects we’ve seen have been camera-based. We understand how final project deadlines can be, so we hope [Michael and John] continue past the deadline with this one. It would be great to see if the absolute position (horizontal and vertical) of the eye can be tracked entirely with the phototransistor technique.
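To make the split between eye-driven horizontal motion and gyroscope-driven vertical motion more concrete, here is a minimal Python sketch of how such a mapping might look. It is not [Michael and John]'s firmware: the function names, the gains, the deadbands, the sign convention, and the idea of using the normalized difference between the two phototransistor readings are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class PointerConfig:
    """Hypothetical tuning values; none of these come from the project."""
    eye_gain: float = 20.0       # pixels per update at full left/right imbalance
    eye_deadband: float = 0.05   # ignore normalized imbalances smaller than this
    gyro_gain: float = 0.5       # pixels per update for each deg/s of head pitch
    gyro_deadband: float = 2.0   # ignore pitch rates below this (deg/s)


def horizontal_offset(left: int, right: int) -> float:
    """Normalized imbalance between the two phototransistor readings, in [-1, 1].

    Assumes both readings are non-negative ADC counts. Which sign means
    "looking left" depends on the sensor geometry, so it is an assumption here.
    """
    total = left + right
    if total == 0:
        return 0.0  # no reflected light measured on either side
    return (right - left) / total


def pointer_delta(left: int, right: int, pitch_rate_dps: float,
                  cfg: PointerConfig) -> tuple[int, int]:
    """Return (dx, dy) in pixels for one update of the mouse pointer."""
    offset = horizontal_offset(left, right)
    # Horizontal: driven by the eye, via the phototransistor imbalance.
    dx = 0 if abs(offset) < cfg.eye_deadband else round(offset * cfg.eye_gain)
    # Vertical: driven by the head, via the gyroscope pitch rate.
    dy = (0 if abs(pitch_rate_dps) < cfg.gyro_deadband
          else round(pitch_rate_dps * cfg.gyro_gain))
    return dx, dy


if __name__ == "__main__":
    cfg = PointerConfig()
    print(pointer_delta(512, 512, 0.0, cfg))   # balanced readings, head still
    print(pointer_delta(300, 700, 10.0, cfg))  # imbalanced readings, head pitching
```

Normalizing by the sum of the two readings is one way to make the horizontal estimate less sensitive to overall brightness changes, and the deadbands keep sensor noise from causing pointer drift. A real implementation would also need per-user calibration, which this sketch leaves out.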