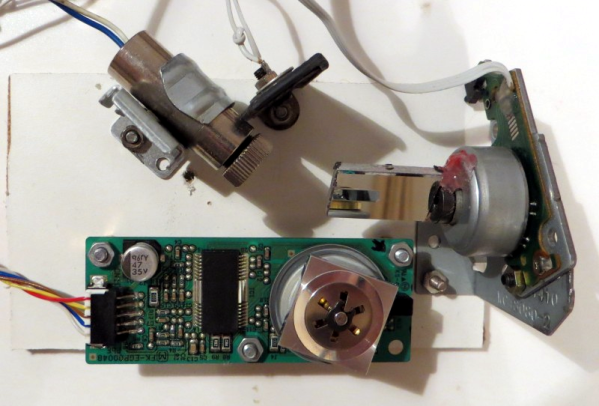

Ten years is almost ancient history in the computing world. Going back twelve years is almost unheard of, but that’s about the time that Palm released the last version of their famed PalmOS, an operating system for small, handheld devices that predated Apple’s first smartphone by yet another ten years. As with all pieces of good software there remain devotees, but with something that hasn’t been updated in a decade there’s a lot of work to be done. [Dmitry.GR] set about doing that work, and making a workable Palm device for the modern times.

He goes into incredible detail on this build, but there are some broad takeaways from the project. First, Palm never really released all of the tools that developers would need to build software easily, including documentation of the API system. Since a new device is being constructed, a lot of this needs to be sorted out. Even a kernel was built from scratch for this project, since using a prebuilt one such as Linux was not possible. There were many other pieces of software needed in order to get a working operating system together running on an ARM processor, which he calls rePalm.

There are many other facets of this project that we aren’t able to get into in this limited space, but if you’re at all interested in operating systems or if you fondly remember the pre-smartphone era devices such the various Palm PDAs that were available in the late ’90s and early ’00s, it’s worth taking a look at this one. And if you’d like to see [Dmitry.GR]’s expertise with ARM, he is well-versed.

Thanks to [furre] for the tip!

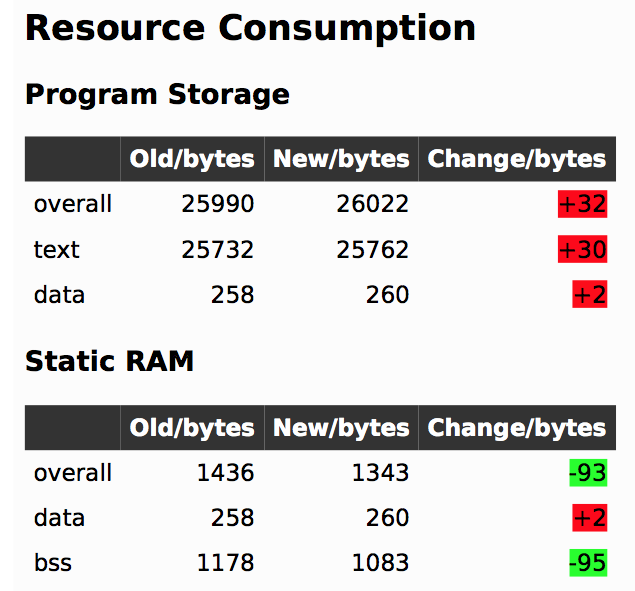

In firmware-land, where flash space can be limited, it’s nice to keep a handle on code size. This can be done a number of ways. Manual inspection of .map files (colloquially “mapfiles”) is the easiest place to start but not conducive to automatic tracking over time. Mapfiles are generated by the linker and track the compiled sizes of object files generated during build, as well as the flash and RAM layouts of the final output files.

In firmware-land, where flash space can be limited, it’s nice to keep a handle on code size. This can be done a number of ways. Manual inspection of .map files (colloquially “mapfiles”) is the easiest place to start but not conducive to automatic tracking over time. Mapfiles are generated by the linker and track the compiled sizes of object files generated during build, as well as the flash and RAM layouts of the final output files.