The Yamaha DX7 was released in 1983, with its FM synthesis engine completely revolutionizing the electronic music world at the time. It didn’t come cheap, and still doesn’t today, but we are blessed with emulators that can give us the same sound on a budget. In that vein, [Kevin] decided to whip up a Yamaha DX7 you can carry around in a little USB dongle.

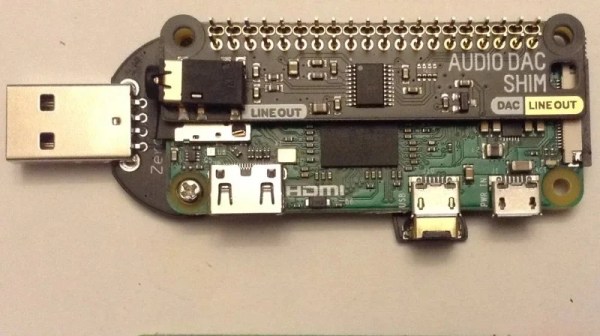

The build centers on a Raspberry Pi Zero, Zero W, or Zero 2W configured to run the MiniDEXED DX7 emulator. The Pi is fitted with a dongle adapter board that allows it to run in USB Gadget mode. The Zero line of Raspberry Pis is well suited to this use, as these boards draw less current than their full-size siblings and so can, under the right conditions, run off a computer’s USB port. The Pi receives MIDI commands over the USB interface, and outputs sound via a Pimoroni Audio Shim. Effectively, the result is a single-channel DX7 synth that plugs in via USB, or eight channels if you use the more powerful Zero 2W.
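For a feel of what the host actually sends down the USB cable, here is a minimal Python sketch that builds raw MIDI Note On and Note Off messages. The byte layout is standard MIDI 1.0. The helper names `note_on` and `note_off` are made up for illustration, and the idea that each of the eight voices on a Zero 2W listens on its own MIDI channel is an assumption, not something confirmed by [Kevin]'s write-up. Getting the bytes to the dongle is left to whatever MIDI library or DAW you prefer.

```python
def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a 3-byte MIDI Note On message (hypothetical helper).

    channel is 0-15 on the wire (shown as 1-16 in most software);
    note and velocity are 7-bit values, 0-127.
    """
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be 0-127")
    # Status byte: high nibble 0x9 means Note On, low nibble is the channel.
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int) -> bytes:
    """Build a 3-byte MIDI Note Off message (hypothetical helper)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not 0 <= note <= 127:
        raise ValueError("note must be 0-127")
    # Status byte: high nibble 0x8 means Note Off; release velocity left at 0.
    return bytes([0x80 | channel, note, 0])


# Assumption: one voice per MIDI channel, eight voices on a Zero 2W.
# This plays middle C (note 60) on each of the first eight channels.
chord = [note_on(ch, 60, 100) for ch in range(8)]
```

On an original Zero or Zero W you would only address a single channel, so `note_on(0, 60, 100)` is all there is to it.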

[Kevin] readily admits that there probably isn’t much use for a DX7 dongle, given that you could just load a DX7 emulator in your DAW of choice instead. Regardless, it’s a fun build, and one that ably demonstrates the USB Gadget mode of operation for the Raspberry Pi. Video after the break.