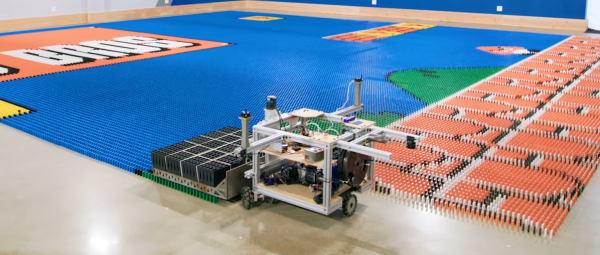

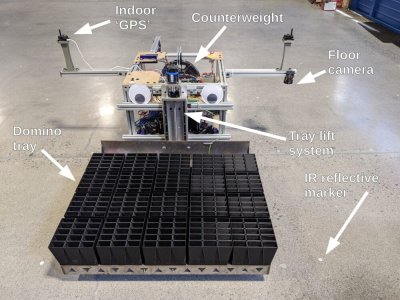

Creating large domino art displays is a long and nerve-racking process, where bumping a single domino can mean starting from scratch. To automate the process of creating these displays, a team consisting of [Mark Rober], [John Luke], [Josh], and [Alex Baucom] built the Dominator, a robot capable of laying 100,000 dominos in just over 24 hours. Video after the break.

[Mark Rober] had been toying with the idea for a few years, and the project finally got off the ground after [Mark] mentioned it in a talk he gave at the 2019 Bay Area Maker Faire. To pull it off, the team created an entire domino-laying system, including an automated loading station, a precision indoor positioning system, and the robot itself.

The robot is built around a frame of aluminum extrusions riding on three omnidirectional wheels driven by precision servo motors. A large tray mounted to the front of the robot can hold and release 300 dominos at a time. The primary controller is a Raspberry Pi 4, which receives positioning information from a Marvelmind indoor positioning system and from a downward-facing IR camera that looks for reflective markers on the floor. The loading station uses conveyors to feed the different colored dominos to an industrial Kuka robot, which drops them down a grid of tubes that can hold multiple layers at once.
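The write-up doesn't say how the Dominator turns a motion command into speeds for its three omni wheels. A common approach for this "kiwi drive" layout is simple inverse kinematics. The sketch below assumes the wheels are evenly spaced at 120° around the robot's center, each rolling tangentially to that circle. The function name, wheel angles, and dimensions are all hypothetical, not taken from the project.

```python
import math


def kiwi_wheel_speeds(vx, vy, omega, base_radius, wheel_radius,
                      wheel_angles_deg=(0.0, 120.0, 240.0)):
    """Hypothetical inverse kinematics for a three-omni-wheel ("kiwi") base.

    vx, vy:           desired robot velocity in the robot's own frame (m/s)
    omega:            desired yaw rate (rad/s), counter-clockwise positive
    base_radius:      distance from robot center to each wheel (m)
    wheel_radius:     omni wheel radius (m)
    wheel_angles_deg: assumed angular position of each wheel around the center
    Returns one angular speed (rad/s) per wheel.
    """
    speeds = []
    for angle_deg in wheel_angles_deg:
        theta = math.radians(angle_deg)
        # Each wheel drives along the tangent of the circle it sits on;
        # its passive rollers let it slide freely in the radial direction.
        surface_speed = (-math.sin(theta) * vx
                         + math.cos(theta) * vy
                         + base_radius * omega)
        # Convert linear rim speed into wheel angular speed.
        speeds.append(surface_speed / wheel_radius)
    return speeds


# Pure rotation: every wheel spins at the same speed.
print(kiwi_wheel_speeds(0.0, 0.0, 1.0, base_radius=0.2, wheel_radius=0.05))
# Pure sideways translation: wheel speeds cancel out, so the base doesn't turn.
print(kiwi_wheel_speeds(1.0, 0.0, 0.0, base_radius=0.2, wheel_radius=0.05))
```

Three wheels make the base holonomic. It can translate in any direction while independently holding or changing its heading, which is convenient when a tray of dominos has to be released at a precise position and orientation.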
