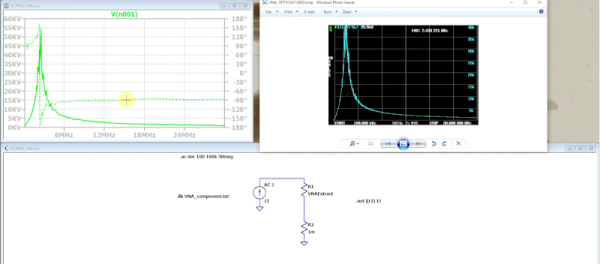

We always enjoy [FesZ’s] videos, and his latest about FREQ function in LTSpice is no exception. In fact, LTSpice doesn’t document it, but it is part of the underlying Spice system. So, of course, you can figure it out or just watch the video below. The FREQ keyword allows you to change component attributes in a frequency-depended way.

Of course, capacitors and inductors are frequency dependent by design. But the FREQ technique allows you to adjust things like voltage sources or resistance in arbitrary ways. By default, you must specify the frequency response data in decibels, which isn’t always convenient. However, [FesZ] shows you how to use other methods to express them using modifiers to the command.