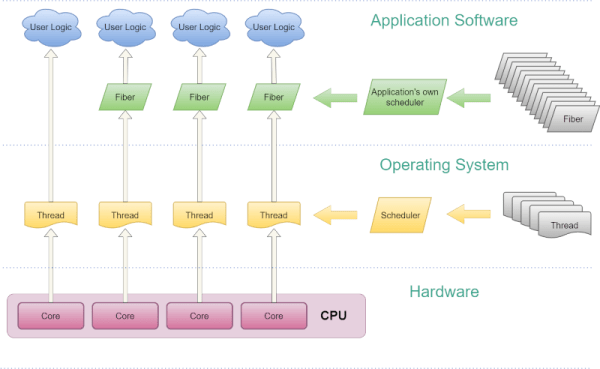

Embedded programming is a tricky task that looks straightforward to the uninitiated, but those with a few decades of experience know differently. Getting what you want to work predictably or even fit into the target can be challenging. When you get to a certain level of complexity, breaking code down into multiple tasks can become necessary, and then most of us will reach for a real-time operating system (RTOS), and the real fun begins. [Aleksei Tertychnyi] clearly understands such issues but instead came up with an alternative they call ANTIRTOS.

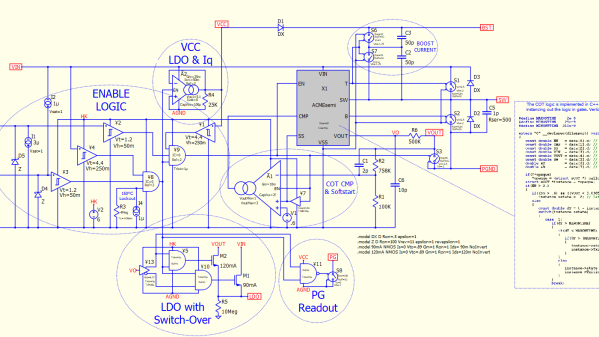

The idea behind the project is not to use an RTOS at all but to manage tasks deterministically by utilizing multiple queues of function pointers. The work results in an ultra-lightweight task management library targeting embedded platforms, whether Arduino-based or otherwise. It’s pure C++, so it generally doesn’t matter. The emphasis is on rapid interrupt response, which is, we know, critical to a good embedded design. Implemented as a single header file that is less than 350 lines long, it is not hard to understand (provided you know C++ templates!) and easy to extend to add needed features as they arise. A small code base also makes debugging easier. A vital point of the project is the management of delay routines. Instead of a plain delay(), you write a custom version that executes your short execution task queue, so no time is wasted. Of course, you have to plan how the tasks are grouped and scheduled and all the data flow issues, but that’s all the stuff you’d be doing anyway.

The GitHub project page has some clear examples and is the place to grab that header file to try it yourself. When you really need an RTOS, you have a lot of choices, mostly costing money, but here’s our guide to two popular open source projects: FreeRTOS and ChibiOS. Sometimes, an RTOS isn’t enough, so we design our own full OS from scratch — sort of.