Anybody born before the mid-1990s will likely remember film cameras being used to document their early years. Although digital cameras took over on convenience and were then themselves largely usurped by mobile phones, there is still a surprising variety of photographic film being produced. Despite the long pedigree, how many of us really know what goes into making such a complex and exacting product? [Destin] from SmarterEveryDay has been to Rochester, NY to find out for himself, and you can see the second in a series of three hour-long videos shedding light on what is normally the strictly lights-out operation of film coating.

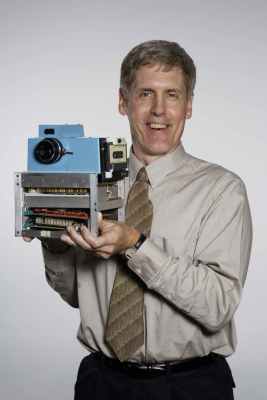

Kodak have been around in one form or another since 1888, and have been producing photographic film since 1889. Around the turn of the millennium, it looked as though digital photography (which Kodak invented but failed to significantly capitalize on) would kill off film for good, and in 2012 Kodak even went into Chapter 11 bankruptcy, which gave the company time to reorganize the business.

They dramatically downsized their film production to meet what they considered to be the future demand, but in a twist of fortune, sales have surged in the last five years after a long decline. So much so, in fact, that Kodak have gradually grown from running a single shift five days per week a few years ago to a 24/7 operation now. They recently hired 300 film technicians and are still recruiting more to meet the double-digit annual growth in demand.

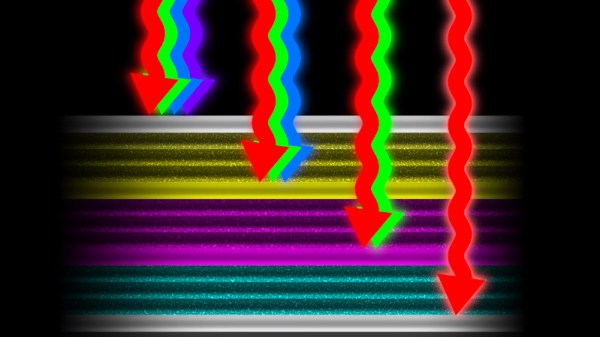

[Destin] goes to great lengths to explain the process, building a 3D model of the film factory to better visualize the facility and including lots of helpful animations. The sheer number of steps is mind-boggling, especially when you consider the precision required at every step and the fact that the factory runs continuously… in the dark, and is around a mile long from start to finish. It’s astonishing to think that this process (albeit at much lower volumes, and with far fewer layers) was originally developed before the Wright Brothers’ first powered flight.

We recently covered getting a vintage film scanner to work with Windows 11, and a little while back we showed you the incredible technology used to develop, scan and transmit film images from space in the 1960s.