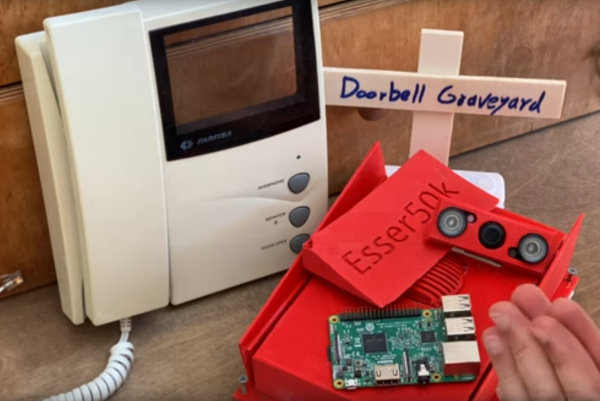

Camera modules for the Raspberry Pi became available shortly after its release in the early ’10s. Since then there has been about a decade of projects eschewing traditional USB webcams in favor of this more affordable, versatile option. Even after all that time there are still some hurdles to overcome, and [Esser50k] has written supporting software for a smart doorbell that helps to solve some of them.

One of the major obstacles to using the Pi camera module is that it can only be used by one process at a time. The PiChameleon software that [Esser50k] built is a clever workaround: it runs the camera as a service, which allows for more flexibility in how the camera is used. He uses it in the latest iteration of a smart doorbell and intercom system. The outdoor unit is a Pi Zero armed with motion detection to alert him to visitors, while another Raspberry Pi inside has a touch screen that serves as an interface for the whole system.
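To make the "camera as a service" idea a little more concrete, here is a minimal Python sketch of the general pattern: one owner reads frames and fans them out to any number of consumers. This is not PiChameleon's actual code or API. The names `FrameBroadcaster`, `fake_camera`, and `capture_loop` are hypothetical, and the camera is simulated so the example runs without any hardware.

```python
import queue
import threading


class FrameBroadcaster:
    """Fan frames from a single camera owner out to many consumers (hypothetical helper)."""

    def __init__(self, max_pending=2):
        self._max_pending = max_pending
        self._subscribers = []
        self._lock = threading.Lock()

    def subscribe(self):
        """Register a consumer and return the queue it should read frames from."""
        q = queue.Queue(maxsize=self._max_pending)
        with self._lock:
            self._subscribers.append(q)
        return q

    def publish(self, frame):
        """Deliver one frame to every subscriber without blocking the capture loop."""
        with self._lock:
            subscribers = list(self._subscribers)
        for q in subscribers:
            try:
                q.put_nowait(frame)
            except queue.Full:
                # Slow consumer: drop its oldest frame so it stays close to live.
                try:
                    q.get_nowait()
                except queue.Empty:
                    pass
                q.put_nowait(frame)


def fake_camera(count):
    """Stand-in for the real camera (assumption): yields dummy frames as bytes."""
    for i in range(count):
        yield f"frame-{i}".encode()


def capture_loop(broadcaster, frames):
    """The only code that touches the camera; everything else reads from queues."""
    for frame in frames:
        broadcaster.publish(frame)


if __name__ == "__main__":
    broadcaster = FrameBroadcaster()
    motion_detector = broadcaster.subscribe()
    video_stream = broadcaster.subscribe()
    capture_loop(broadcaster, fake_camera(3))
    print(motion_detector.get_nowait(), video_stream.get_nowait())
```

In a real setup the capture loop would presumably run in its own thread or process and read from the actual camera, with consumers such as a motion detector and a video stream each holding their own queue. Dropping the oldest frame for slow consumers is one design choice among several; a doorbell generally cares more about the current view than about a complete history.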

The build process over the past few years was rife with learning opportunities, including technical design problems and plenty of user errors that caused failures. Some extra features have been added that enhance the experience, such as automatically talking to strangers passing by. There are other unique ways of using machine learning on doorbells too, like this one that listens for a traditional doorbell sound and then alerts its user.