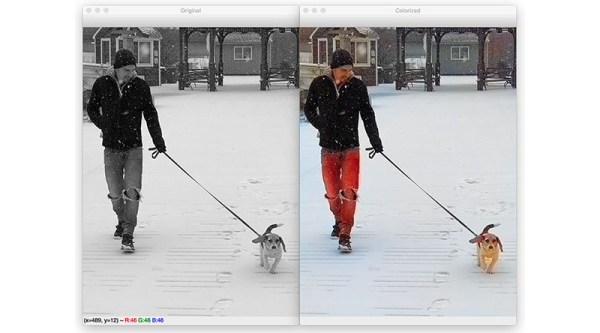

The world was never black and white – we simply lacked the technology to capture it in full color. Many have experimented with techniques for colorizing black and white images. [Adrian Rosebrock] decided to put an AI on the job, with impressive results.

The method involves training a Convolutional Neural Network (CNN) on a large batch of photos, which have been converted to the Lab colorspace. In this colorspace, images are made up of three channels – lightness (L), a (green-red), and b (blue-yellow). Lab is used because it corresponds more closely to the human visual system than RGB does, and because it keeps brightness separate from color. The model is trained so that, given the lightness channel as input, it predicts the likely a and b channels. These are then recombined with the original lightness channel into a colorized image, which is converted back to RGB for human consumption.
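To make the channel split concrete, here is a minimal pure-Python sketch of the round trip for a single pixel. It is not the code from the project: the function names are hypothetical, the conversion uses the standard sRGB/CIE Lab formulas with an assumed D65 white point, and the "prediction" is simply the pixel's true a and b values standing in for what a trained network would output.

```python
# Illustrative sketch only: standard sRGB <-> CIE Lab formulas, D65 white assumed.
# All function names here are hypothetical, not taken from the original project.

WHITE = (0.95047, 1.0, 1.08883)  # D65 reference white (X, Y, Z)
DELTA = 6 / 29


def _f(t):
    # Cube root with a linear segment near zero, as in the CIE Lab definition.
    return t ** (1 / 3) if t > DELTA ** 3 else t / (3 * DELTA ** 2) + 4 / 29


def _f_inv(t):
    return t ** 3 if t > DELTA else 3 * DELTA ** 2 * (t - 4 / 29)


def rgb_to_lab(rgb):
    """Convert one sRGB pixel (components in 0..1) to (L, a, b)."""
    # Undo the sRGB gamma curve to get linear light.
    r, g, b = (c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4
               for c in rgb)
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b
    fx, fy, fz = (_f(v / w) for v, w in zip((x, y, z), WHITE))
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def lab_to_rgb(lab):
    """Convert (L, a, b) back to an sRGB pixel, clamped to 0..1."""
    lightness, a, b = lab
    fy = (lightness + 16) / 116
    fx, fz = fy + a / 500, fy - b / 200
    x, y, z = (w * _f_inv(f) for f, w in zip((fx, fy, fz), WHITE))
    linear = (
        3.2404542 * x - 1.5371385 * y - 0.4985314 * z,
        -0.9692660 * x + 1.8760108 * y + 0.0415560 * z,
        0.0556434 * x - 0.2040259 * y + 1.0572252 * z,
    )
    out = []
    for c in linear:
        c = min(max(c, 0.0), 1.0)  # clamp out-of-gamut values before gamma
        out.append(12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055)
    return tuple(out)


def recombine(lightness, predicted_ab):
    """Merge the known lightness with (predicted) a/b values and return sRGB."""
    return lab_to_rgb((lightness, predicted_ab[0], predicted_ab[1]))


original = (0.2, 0.6, 0.3)           # a grassy green
L, a, b = rgb_to_lab(original)
gray = lab_to_rgb((L, 0.0, 0.0))     # lightness only: what the network is given
restored = recombine(L, (a, b))      # true a/b stand in for the model's output
```

With a and b zeroed the pixel comes back as a neutral gray, and supplying the color channels again restores the original green. That is why the network only has to predict two channels rather than three.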

It’s a technique capable of doing a decent job on a wide variety of material. Subjects such as grass, countryside, and ocean are handled particularly well, while more complicated scenes can suffer from some aberration. Regardless, it’s a useful technique, and far less tedious than manual methods.

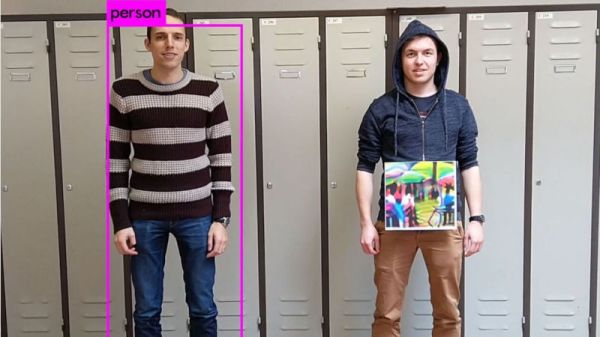

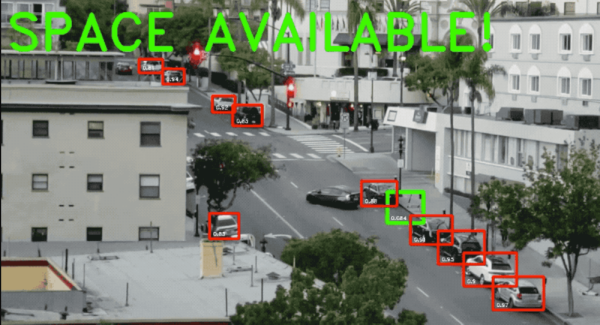

CNNs are doing other great things too, from naming tomatoes to helping out with home automation. Video after the break.