Counting all the screens in our lives takes a hot minute. Some are touchscreens and some need a mouse or keyboard, and most of us are accustomed to all of these input devices. Not everyone can use them, though: people with cerebral palsy, for example, may rely on eye-tracking hardware. Traditionally, an eye tracker only works with the computer it is connected to, so switching from a chair-mounted screen to a tablet on the desk is not an option. To give folks the ability to control different computers effortlessly, [Zack Freedman] is developing a head-mounted eye tracker that is not tied to one computer. In a way, this is like a KVM switch, but way more futuristic. [Tony Stark] would be proud.

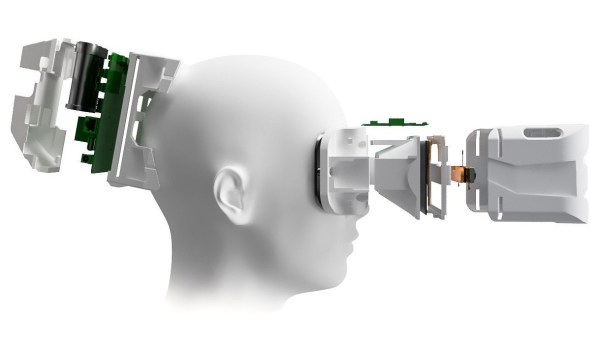

An infrared detector on the headset identifies compatible screens in the line of sight and syncs up with the HID dongle associated with the screen in view. A headset-mounted color camera tracks the head position relative to the screen while an IR camera scans the eye to calculate where the user is focusing. All the technology here is proven, but this new recipe could be a game-changer for anyone who has trouble with the traditional keyboard, mouse, and touchscreen. Maybe QR codes could assist with screen identification and orientation, similar to how a Wii remote and sensor bar work together.
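
To make the head-plus-eye idea concrete, here is a minimal Python sketch of one way a gaze point could be turned into a cursor position. It is not [Zack]'s implementation. It assumes the color camera has already located the four corners of the screen in its image, and that the eye tracker reports the gaze point in that same image's pixel coordinates. The function names (`homography`, `gaze_to_cursor`) and all the numbers are made up for illustration. The technique is a standard four-point planar homography, solved here in plain Python.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a*x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner layout")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography(src: Sequence[Point], dst: Sequence[Point]) -> List[List[float]]:
    """3x3 homography (bottom-right entry fixed to 1) mapping 4 src points to 4 dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(h: List[List[float]], p: Point) -> Point:
    """Project a point through the homography, including the perspective divide."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def gaze_to_cursor(corners_in_camera: Sequence[Point],
                   gaze_in_camera: Point,
                   screen_size: Tuple[int, int]) -> Optional[Point]:
    """Map a gaze point in the head camera image to screen pixels.

    corners_in_camera is assumed to be ordered top-left, top-right,
    bottom-right, bottom-left. Returns None when the gaze falls off the screen.
    """
    w, h = screen_size
    screen_corners = [(0, 0), (w, 0), (w, h), (0, h)]
    hmat = homography(corners_in_camera, screen_corners)
    x, y = apply_homography(hmat, gaze_in_camera)
    if 0 <= x <= w and 0 <= y <= h:
        return (x, y)
    return None


# Hypothetical numbers: a screen seen slightly from the side, gaze near its middle.
corners = [(100.0, 100.0), (300.0, 120.0), (300.0, 220.0), (100.0, 240.0)]
print(gaze_to_cursor(corners, (200.0, 170.0), (1920, 1080)))
```

A `None` result is a natural cue for the KVM-like part of the idea: if the gaze is not on this screen, the headset could check the next compatible screen it sees and route cursor events to that screen's dongle instead. Markers such as QR codes would be one way to get the corner positions this sketch takes for granted.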