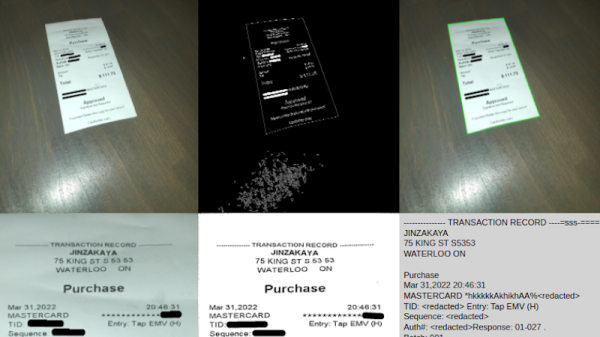

It’s one of those things that certainly sounds simple enough: take a picture of a receipt, run it through optical character recognition (OCR), and send the resulting information to whatever expense-tracking website or software you wish. There are companies that offer such a service, so it can’t be too difficult to replicate on your own…right?

That’s what [Marcel Robitaille] thought when he set out to create his homebrew “Receipt Ingestion” system, anyway. But in reality it took so much time to troubleshoot and implement that he says it would have been faster to just enter in all his receipts by hand. We’re happy he stuck with it though, otherwise you wouldn’t be reading about it on Hackaday, and we wouldn’t be able to learn anything from the detailed account he’s provided.

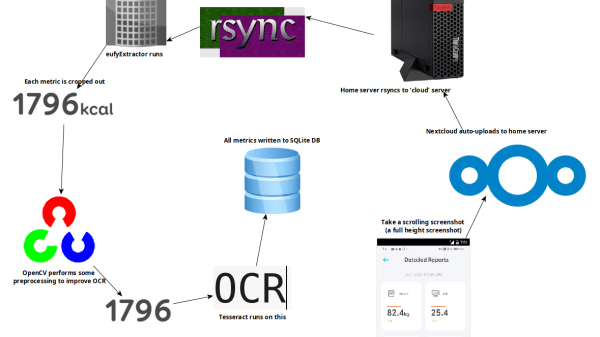

It only took an evening to hack together a rough demo, and the initial results were very promising. The code could detect the edges of the receipt, rotate the captured image appropriately, and then pull out the critical information such as date, total amount, business name, etc. He was then able to decipher the API for Splitwise, an online service for splitting bills, by capturing the data sent by his browser while adding a new bill. With this information, writing up some Python code to push his captured data into the service was trivial. So far, so good.

But like so many horror films that begin with a happy family starting a new life in a beautiful home, there was a monster lurking in the shadows. It’s one thing to capture data from perfectly clean and flat receipts, but quite another to get any useful info out of one that spent half the day crumpled up in your back pocket. The promising proof of concept that worked a treat under controlled conditions failed completely in the real-world, with [Marcel] reporting that only 1 in 5 receipts he tried to scan actually went through.

In the end, [Marcel] realized that the best way to handle the unreliable condition of the receipts was to focus on a different object in the image. He came up with a QR code marker that he could put on the table with the receipt to be scanned, which his software can use as a known point of reference. This greatly improves the reliability of the image rotation and transformation, which in turn makes the OCR more reliable. It also makes it much easier to tell which images need to be scanned — if there’s no QR code found, the software just skips that shot and keeps looking.

The unique challenges of digitizing large amounts of printed content using OCR makes for some fascinating problem solving, and we’re glad [Marcel] shared this particular story with us. While there’s still some edge cases that need chasing down, he’s using the software on a nearly daily basis, and has posted it up on GitHub for anyone who might wish to build on his efforts.

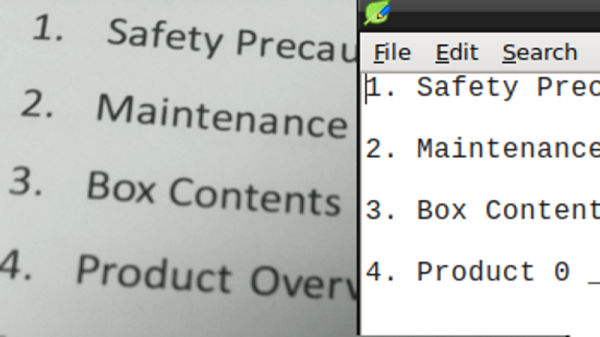

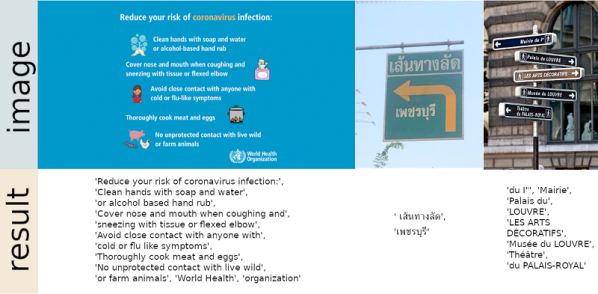

First of all, while OCR can be reliable, it needs the right conditions. One thing that ended up being a big problem was the way the app appends units (kg, %) after the numbers. Not only are they tucked in very close, but they’re about half the height of the numbers themselves. It turns out that mixing and matching character height, in addition to snugging them up against one another, is something tailor-made to give OCR reliability problems.

First of all, while OCR can be reliable, it needs the right conditions. One thing that ended up being a big problem was the way the app appends units (kg, %) after the numbers. Not only are they tucked in very close, but they’re about half the height of the numbers themselves. It turns out that mixing and matching character height, in addition to snugging them up against one another, is something tailor-made to give OCR reliability problems.