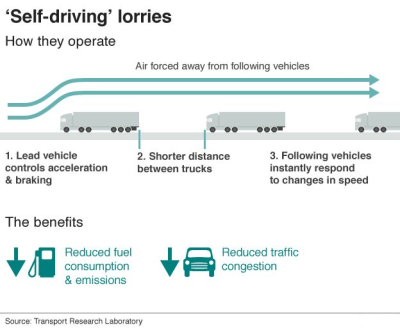

The [BBC] is reporting that driverless semi-trailer trucks, or as we call them in the UK, driverless lorries, are to be tested on UK roads. A contract has been awarded to the Transport Research Laboratory (TRL) for the trials. Initially the technology will be tested on closed tracks, but these trials are expected to move to major roads by the end of 2018.

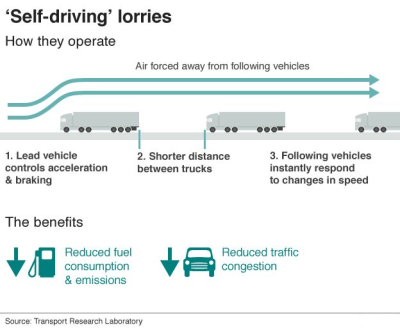

All of these lorries will be manned and will drive in formations of up to three vehicles in single file. The lead vehicle will connect to the others wirelessly and control their braking and acceleration. Human drivers will still be present to steer the following lorries in the convoy.

This automation will allow the trucks to drive very close together, reducing drag for the following vehicles to improve fuel efficiency. “Platooning”, as they call these convoys, has been tested in a number of countries around the world, including the US, Germany, and Japan.

Are these actually autonomous vehicles? This question is folly when looking toward the future of “self-driving”. The transition to robot vehicles will not happen in the blink of an eye, even if all the technological barriers were suddenly overcome. That’s because it’s untenable for human drivers to suddenly share the road with vehicles that don’t have a human brain behind the wheel. These changes will happen incrementally. The lorry tests are akin to networked cruise control. But we can see a path that will add in lane drift warnings, steering correction, and more incremental automation until only the lead vehicle has a person behind the wheel.

There is a lot of interest in the self-driving industry right now, from the self-driving potato to autonomous delivery. We’d love to hear your vision of how automated delivery will sneak its way into our everyday lives. Tell us what you think in the comments below.
